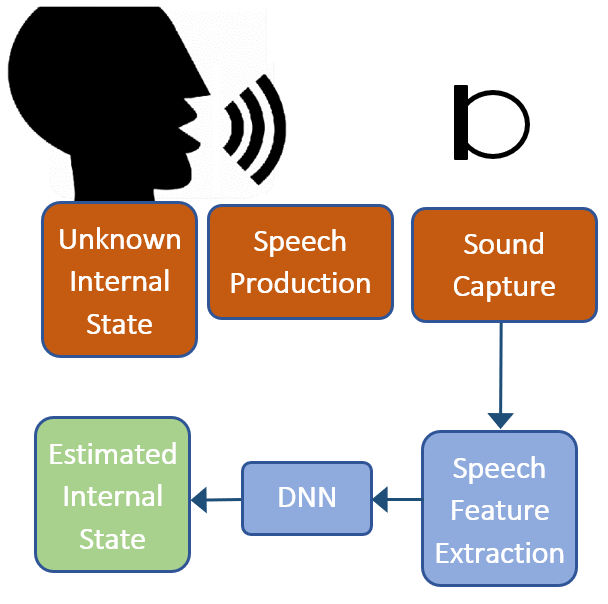

Speech can be used as a biomarker for a person’s internal state. When we talk with another person, visual and auditory cues help us understand their physical and mental state. The circumstances of the past few years have changed how we interact with our health care providers. Telemedicine has become more commonplace for reasons of safety and convenience, but these subtle cues are lost in the communication. A system that can identify a person’s state from the captured speech signal would be a useful tool for health care providers and emergency dispatchers.

Is a person in pain? Are they depressed? Are they fearful? Are they suffering from dementia? The speech signal can be decomposed into features that provide insight into these questions. The following biomarkers can be used:

- Speech Intensity

- Fundamental Frequency (F0) floor

- F0 variability and range

- Sentence contours

- High Frequency energy

- Articulation Rate

- Harmonic to Noise Ratio
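
As a rough illustration of how two of the biomarkers above might be measured, the sketch below computes speech intensity as an RMS level in dB and estimates F0 from the autocorrelation peak of a single frame. The function names, the 75 to 400 Hz search range, and the synthetic test tone are assumptions made for this example, not part of any particular toolkit; a real system would more likely rely on a dedicated pitch tracker and would process many overlapping frames.

```python
import math


def rms_intensity_db(frame):
    """RMS level of a frame in dB relative to full scale (amplitude 1.0)."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20.0 * math.log10(max(rms, 1e-12))  # floor avoids log10(0) on silence


def estimate_f0(frame, sample_rate, f0_min=75.0, f0_max=400.0):
    """Estimate F0 in Hz from the strongest autocorrelation peak.

    The search range (f0_min, f0_max) is an assumed range for adult speech.
    Returns 0.0 when no positive peak is found (treated as unvoiced).
    """
    lag_min = int(sample_rate / f0_max)
    lag_max = min(int(sample_rate / f0_min), len(frame) - 1)
    best_lag, best_score = 0, 0.0
    for lag in range(lag_min, lag_max + 1):
        # Unnormalized autocorrelation: slightly favors shorter lags, which
        # helps avoid picking a multiple of the true period.
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else 0.0


# Synthetic stand-in for a voiced frame: 50 ms of a 200 Hz tone at 8 kHz.
SAMPLE_RATE = 8000
frame = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(400)]

intensity_db = rms_intensity_db(frame)
f0_hz = estimate_f0(frame, SAMPLE_RATE)
print(f"intensity: {intensity_db:.2f} dBFS, F0: {f0_hz:.1f} Hz")
```

Running the same two functions over successive frames would give intensity and F0 tracks, from which the F0 floor, range, and variability listed above could be derived.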

For example, when a person is in pain, they tend to increase the intensity and F0 of their voice. Depending on the type of pain or the person, words may be extremely drawn out or spoken very rapidly. When a person is depressed, the intensity and F0 of their voice decrease, and they speak more slowly and monotonously. The question now is how to use this information to accurately classify a person’s internal state. The answer is deep neural networks (DNNs). A properly trained network can take either a raw waveform or an extracted set of speech features and estimate a classification of the internal state.
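
To make the feature-based path concrete, here is a minimal sketch of the forward pass of a small fully connected network that maps a feature vector to class probabilities. Everything in it is illustrative: the class labels, the layer sizes, and the feature values are hypothetical, and the weights are random rather than trained, so the predicted label carries no meaning. It only shows the shape of the computation a trained classifier would perform.

```python
import math
import random

# Hypothetical class labels chosen for illustration only.
LABELS = ["neutral", "pain", "depressed"]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_out, n_in, rng):
    """Random, untrained weights; a real model would learn these from data."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def classify(features, layers):
    """Run hidden layers with ReLU, then a softmax output layer."""
    hidden = features
    for weights, biases in layers[:-1]:
        hidden = relu(dense(hidden, weights, biases))
    out_weights, out_biases = layers[-1]
    probs = softmax(dense(hidden, out_weights, out_biases))
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs


rng = random.Random(0)  # fixed seed so the sketch is repeatable
layers = [init_layer(8, 3, rng), init_layer(len(LABELS), 8, rng)]

# Assumed input: normalized intensity, F0, and articulation rate.
features = [0.2, -1.1, 0.5]
label, probs = classify(features, layers)
print(label, [round(p, 3) for p in probs])
```

In practice the weights would be learned from labeled recordings, and the input features would be normalized with statistics from the training set before being passed to the network.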