Stochastic Resonance (SR) is a phenomenon where noisy signals can be enhanced by adding extra noise and feeding them through a bi-stable system. For example, the noisy signal x(n) can be enhanced by solving

y‘(n) = ay(n) – by(n)3 + x(n) + ξ(n)

for the unknown signal y(n), where ξ(n) is Gaussian white noise with variance σ2. This is inherently a continuous system that needs to be approximated in discrete time in order to be applicable.

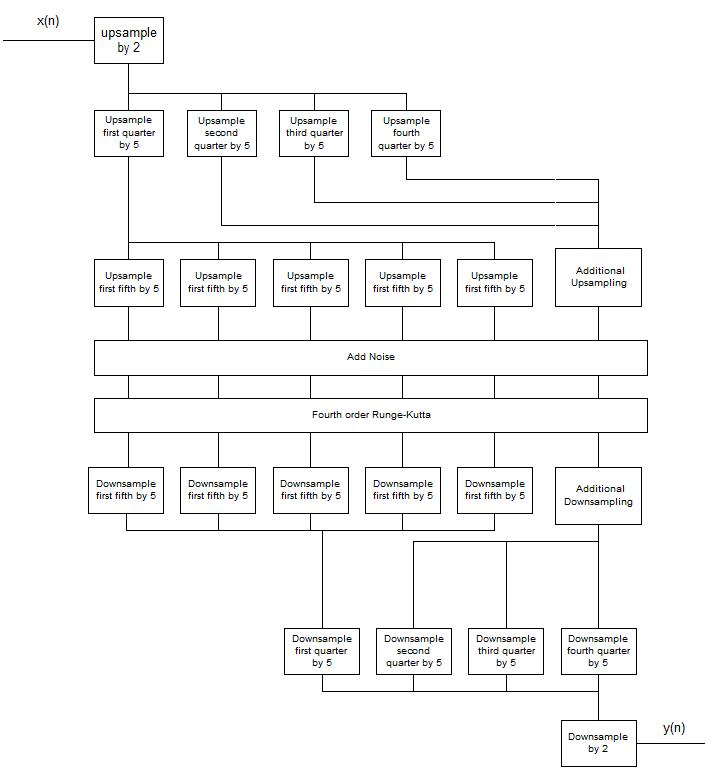

The first issue to be addressed is that in order for SR to occur, the signal needs to be oversampled by a factor of 50: i.e, we can only expect to enhance components of the signal with periods at least 100 samples. In order to detect frequencies up to the Nyquist limit, we will need to upsample our original signal by a factor of 50. Since this will take a reasonable block of the original signal, say 100 samples, to an unmanageable size, 5000 samples, there needs to be a method to prevent the excessive use of memory. This can be accomplished by cascading upsamling filters as follows: upsample 100 samples by a factor of 2 to 200 samples. For each group of 50 samples, upsample by a factor of 5 to 250 samples. For each of the groups of 50 from the 250 samples, upsample by a factor of 5 and perform the stochastic resonance. After performing the stochastic resonance, we can downsample in a similar cascading manner to return to the original sampling frequency.

We also need to consider how to construct the actual solution of the nonlinear differential equation

y‘(n) = ay(n) – by(n)3 + x(n) + ξ(n)

based on our discrete oversampled signal. This can be accomplished by applying the fourth order Runge-Kutta method. This method splits the interval from n to n + 1 into 4 subintervals, and approximates the value of y‘ on each interval using the differential equation. Specifically, we have

| k1 = h[ay(n) – by(n)3 + x(n) + ξ(n)] |

| k2 = h[a(y(n) + ½k1) – b(y(n) + ½k1)3 + x(n) + ξ(n)] |

| k3 = h[a(y(n) + ½k2) – b(y(n) + ½k2)3 + x(n + 1) + ξ(n + 1)] |

| k4 = h[a(y(n) + k3) – b(y(n) + k3)3 + x(n + 1) + ξ(n + 1)] |

| y(n + 1) = y(n + ⅙(k1 + 2k2 + 2k3 + k4) |

where h is the time difference between n to n + 1, which for voice coding at 8 kHz, would be 2.5ns.