Acoustic Echo Cancellation: Principles, Challenges, and Advanced Techniques

Abstract

This white paper explores acoustic echo cancellation (AEC) techniques in human-machine interface systems, including applications such as social robots. AEC is crucial for suppressing unwanted echoes in audio processing environments, particularly when multiple loudspeakers and microphone arrays are involved. Following established methodologies as outlined in previous work and the literature [1], this paper presents the challenges associated with multichannel AEC, including time-variance of echo paths, reverberation, loudspeaker signal correlation, and double-talk scenarios.

Introduction

Acoustic human-machine interfaces often involve a setup with multiple loudspeakers and microphone arrays. The primary challenge in such systems is capturing clear speech while minimizing interference from ambient noise, nearby conversations, and echoes originating from loudspeaker playback. Addressing these challenges requires the combined application of acoustic echo cancellation (AEC) and adaptive beamforming. While adaptive beamforming enhances signal clarity by focusing on the desired sound source, AEC specifically targets the removal of echoes that arise when loudspeaker outputs are picked up by the microphones.

This white paper focuses on the principles and challenges of AEC; a detailed discussion of adaptive beamforming is reserved for a future white paper. A further white paper will outline how both techniques can be integrated into a unified system. Such joint systems are an emerging area of research: by combining echo suppression with directional sound capture, they can improve performance in dynamic and noisy environments where both clear speech and minimal echo are required.

Acoustic Echo Cancellation

Acoustic echo cancellation (AEC) is a key technique used to eliminate unwanted echoes in audio processing systems, particularly in environments with multiple loudspeakers and microphones. This section provides an overview of the fundamental concepts of multichannel AEC, emphasizing the main challenges and practical considerations.

Principle of Multichannel AEC

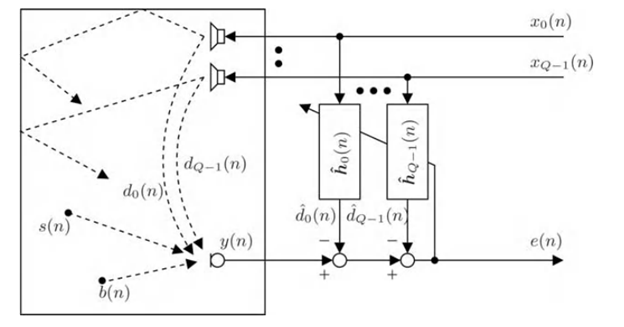

In a multichannel setup, Q loudspeakers produce playback signals. These signals are emitted from the loudspeakers and then picked up by the microphones as acoustic echoes. Typically, amplifiers and transducers are assumed to exhibit linear behavior, allowing for a linear model of the echo paths between loudspeaker signals and microphone outputs. However, in instances where nonlinearities are significant, advanced modeling techniques become necessary.

To suppress the echoes, adaptive filters are implemented in parallel with the echo paths. These filters use the loudspeaker signals as reference inputs to generate echo replicas. By subtracting these replicas from the microphone signals, the system reduces the acoustic echoes. AEC is thus framed as a system identification problem in which adaptive linear filters model the echo paths so that their contribution can be removed. Figure 1 provides a schematic representation of this process.
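As a compact way to read the scheme described above, the signal model can be written out for a single microphone. The notation is an assumption introduced here for illustration and is not taken from Figure 1: $\mathbf{x}_q(k)$ collects the most recent $N$ samples of loudspeaker signal $q$ at time index $k$, $\mathbf{h}_q$ is the true echo path from loudspeaker $q$ to the microphone, $\hat{\mathbf{h}}_q(k)$ is the corresponding adaptive filter, and $s(k)$ is the local speech plus noise.

$$
y(k) = \sum_{q=0}^{Q-1} \mathbf{h}_q^{T}\,\mathbf{x}_q(k) + s(k), \qquad
\hat{d}(k) = \sum_{q=0}^{Q-1} \hat{\mathbf{h}}_q^{T}(k)\,\mathbf{x}_q(k), \qquad
e(k) = y(k) - \hat{d}(k)
$$

Under this model, the output $e(k)$ contains less echo the more closely each $\hat{\mathbf{h}}_q(k)$ matches $\mathbf{h}_q$, which is the system identification view of AEC.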

Key Challenges in Acoustic Echo Cancellation

Time-Variance of Echo Paths:

Echo paths may vary due to environmental changes or moving sound sources, requiring adaptive algorithms to respond quickly and track changes efficiently.

Reverberation Time:

The reverberation time ($T_{60}$) varies greatly depending on the environment, ranging from approximately 50 ms in small spaces to over 1 second in large halls. Achieving effective echo suppression (e.g., ERLE ≈ 20 dB) necessitates adaptive filters with thousands of coefficients, especially at higher sampling rates (8–48 kHz). This can slow convergence when echo paths shift. The relationship describing the number of filter coefficients $N$ is given by:

$$N \approx \frac{\mathrm{ERLE}}{60} \cdot f_s \cdot T_{60}$$

where ERLE is the echo suppression in dB, $f_s$ is the sampling rate, and $T_{60}$ is the reverberation time.
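To get a feel for the orders of magnitude involved, the following minimal sketch evaluates the common rule of thumb N ≈ (ERLE / 60) · f_s · T_60. The reasoning is that the room impulse response decays by 60 dB over T_60, so suppressing the echo by ERLE dB requires modeling roughly ERLE/60 of that duration. The function name `filter_length` and the chosen example values are assumptions made for illustration.

```python
import math

def filter_length(erle_db, fs_hz, t60_s):
    """Rule-of-thumb number of adaptive filter coefficients.

    erle_db: desired echo suppression in dB
    fs_hz:   sampling rate in Hz
    t60_s:   reverberation time in seconds
    """
    return math.ceil(erle_db * fs_hz * t60_s / 60.0)

# Sweep a few sampling rates and reverberation times for ERLE = 20 dB.
for fs_hz in (8000, 16000, 48000):
    for t60_s in (0.05, 0.5, 1.0):
        n = filter_length(20.0, fs_hz, t60_s)
        print(f"fs = {fs_hz:5d} Hz, T60 = {t60_s:4.2f} s -> about {n} coefficients")
```

For instance, 20 dB of suppression at 48 kHz in a room with a reverberation time of 1 s calls for about 16000 coefficients, whereas 8 kHz in a small space with 50 ms needs only about 134.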

Convergence time is a critical factor in AEC, especially in environments where echo paths change rapidly. Fast-converging algorithms are essential to maintain echo suppression during dynamic scenarios, minimizing residual echoes.

Correlation of Loudspeaker Signals:

In multichannel setups, spatial enhancement techniques like weighting and delay adjustments can introduce high auto- and cross-correlation among loudspeaker signals, which slows the convergence of adaptive algorithms. Solutions include prewhitening filters, adding inaudible nonlinearities, or introducing uncorrelated noise.
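One way to picture the "inaudible nonlinearity" option is a half-wave rectifier added to each channel with opposite polarity. The sketch below is a hedged illustration of that idea, not a description of any particular system: the function names, the zero-lag correlation coefficient used as a stand-in for inter-channel coherence, and the value `alpha=0.5` are all assumptions, and `alpha` is deliberately exaggerated here so that the effect is visible (a practical system would use a much smaller value to keep the distortion inaudible).

```python
import math
import random

def half_wave_decorrelate(left, right, alpha=0.5):
    """Add a half-wave rectified copy to each channel, with opposite polarity."""
    new_left = [x + alpha * 0.5 * (x + abs(x)) for x in left]    # positive half-wave
    new_right = [x + alpha * 0.5 * (x - abs(x)) for x in right]  # negative half-wave
    return new_left, new_right

def correlation(a, b):
    """Sample correlation coefficient (zero lag) of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

random.seed(1)
source = [random.gauss(0.0, 1.0) for _ in range(20000)]
left, right = list(source), list(source)  # worst case: identical channels

before = correlation(left, right)
after = correlation(*half_wave_decorrelate(left, right))
print(f"correlation before: {before:.4f}, after: {after:.4f}")
```

Because the two channels are distorted differently, they are no longer exact copies of each other, which is what gives the adaptive filters information to separate the individual echo paths.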

Double Talk Scenarios:

When local speech or ambient noise overlaps with the echo signal, adaptive filters may diverge. Stability is maintained by control mechanisms that adjust the algorithm’s step size according to the acoustic conditions: a low echo-to-disturbance ratio calls for a smaller step size, which in turn increases convergence time.
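The dependence of the step size on the acoustic conditions can be sketched with a simple rule that scales the step size by the share of the error power that is due to residual echo. This is an illustrative assumption rather than the control scheme of any specific system: the function name `controlled_step_size` is hypothetical, the two powers are assumed to be uncorrelated, and in practice the residual echo power is not directly observable and has to be estimated.

```python
import math

def controlled_step_size(residual_echo_power, disturbance_power, mu_max=1.0):
    """Step size that shrinks as the echo-to-disturbance ratio drops.

    residual_echo_power: (estimated) power of the echo left after cancellation
    disturbance_power:   (estimated) power of local speech and noise
    """
    error_power = residual_echo_power + disturbance_power
    if error_power <= 0.0:
        return 0.0  # no signal at all, so there is nothing to adapt on
    return mu_max * residual_echo_power / error_power

# Step size for a fixed residual echo and increasing local disturbance.
for disturbance in (0.0, 1.0, 9.0, 99.0):
    ratio_db = float("inf") if disturbance == 0.0 else 10.0 * math.log10(1.0 / disturbance)
    mu = controlled_step_size(1.0, disturbance)
    print(f"echo-to-disturbance ratio {ratio_db:6.1f} dB -> step size {mu:.2f}")
```

With no disturbance the filter adapts at full speed, while during double talk the step size falls toward zero, which protects the filter at the cost of slower tracking.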

Adaptation Algorithms for AEC

To optimize echo cancellation, algorithms such as NLMS (Normalized Least Mean Squares), affine projection, and RLS (Recursive Least Squares) are commonly used. These algorithms trade computational cost against convergence speed: NLMS is the least expensive but converges slowly for correlated signals such as speech, RLS converges fastest at the highest cost, and affine projection lies in between. Frequency-domain techniques, such as DFT-domain processing and frequency subband filtering, take advantage of signal sparseness, enabling more efficient adaptation by tailoring convergence speed to frequency-dependent signal characteristics.
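As a concrete reference point, the following is a minimal NLMS sketch for a single loudspeaker and a single microphone. It runs under simplifying assumptions that do not hold in a real room: the echo path is a short, fixed, made-up impulse response, the reference signal is white noise, and there is no local speech or noise. All names (`nlms_echo_canceller`, `echo_path`, `mu`, `eps`) are chosen for illustration.

```python
import random

def nlms_echo_canceller(reference, microphone, num_taps, mu=0.5, eps=1e-8):
    """Time-domain NLMS: returns the final filter and the echo-reduced output."""
    weights = [0.0] * num_taps
    buffer = [0.0] * num_taps  # most recent reference samples, newest first
    output = []
    for x_k, y_k in zip(reference, microphone):
        buffer = [x_k] + buffer[:-1]
        echo_replica = sum(w * x for w, x in zip(weights, buffer))
        error = y_k - echo_replica               # microphone minus echo replica
        norm = sum(x * x for x in buffer) + eps  # input power normalization
        gain = mu * error / norm
        weights = [w + gain * x for w, x in zip(weights, buffer)]
        output.append(error)
    return weights, output

random.seed(0)
echo_path = [0.6, -0.3, 0.2, 0.1, -0.05, 0.02]  # hypothetical echo path
reference = [random.gauss(0.0, 1.0) for _ in range(3000)]
microphone = [
    sum(echo_path[i] * reference[k - i] for i in range(len(echo_path)) if k - i >= 0)
    for k in range(len(reference))
]

weights, output = nlms_echo_canceller(reference, microphone, num_taps=len(echo_path))
misalignment = (
    sum((w - h) ** 2 for w, h in zip(weights, echo_path))
    / sum(h * h for h in echo_path)
)
print(f"normalized misalignment after adaptation: {misalignment:.3e}")
```

In this idealized setting the filter converges to the echo path almost exactly. With speech as the reference, filter lengths in the thousands, and double talk, convergence is far slower, which is why the faster algorithms and frequency-domain variants mentioned above are of interest.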

Residual Echo and Noise Mitigation

Even with robust algorithms, residual echoes can persist, especially in dynamic environments. AEC systems therefore often cascade a post-processing stage after the echo canceller, using noise reduction methods such as spectral subtraction or Wiener filtering to suppress the residual echo and time-varying background noise and to improve overall audio clarity.
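A post-filter of this kind can be sketched as a per-frequency-bin gain of the Wiener type, applied to the spectrum of the AEC output. The example below is a simplified illustration under stated assumptions: the function name `residual_echo_gain` is hypothetical, the power spectra are given as plain lists, the residual echo power per bin is assumed to be already estimated, and the gain floor of 0.1 is an arbitrary choice to limit artifacts.

```python
def residual_echo_gain(error_psd, residual_echo_psd, gain_floor=0.1):
    """Per-bin Wiener-style gain: 1 - (residual echo power / total power), floored."""
    gains = []
    for p_err, p_res in zip(error_psd, residual_echo_psd):
        if p_err <= 0.0:
            gains.append(gain_floor)  # empty bin: fall back to the floor
            continue
        gains.append(max(gain_floor, 1.0 - p_res / p_err))
    return gains

error_psd = [4.0, 1.0, 0.5]          # power of the AEC output per frequency bin
residual_echo_psd = [1.0, 1.0, 0.0]  # estimated residual echo power per bin
print(residual_echo_gain(error_psd, residual_echo_psd))  # → [0.75, 0.1, 1.0]
```

Bins dominated by residual echo are attenuated down to the floor, while bins that contain only the desired signal pass unchanged.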

Conclusion

This white paper has presented the fundamental principles and challenges of acoustic echo cancellation, with a focus on multichannel setups and adaptive filtering techniques. The concepts discussed are informed by established methodologies in the field, including those outlined in previous work and research [1]. The aim has been to contextualize these methods in a practical and innovative way. Future white papers will address adaptive beamforming and the integration of AEC and beamforming techniques into unified audio processing systems.

References

- [1] E. Hänsler and G. Schmidt (eds.), Topics in Acoustic Echo and Noise Control: Selected Methods for the Cancellation of Acoustical Echoes, the Reduction of Background Noise, and Speech Processing, Springer, 2006.