This example of noise reduction algorithms uses Welch’s method [1] to create an estimate of the noise-only power spectral density to estimate the a priori signal to noise ratio. A soft-decision voice activity detector is implemented using the a priori estimate in a log-likelihood ratio test as as in [2]. A suppression rule is then derived following the methods of [3] and used in accordance with the voice activity hypothesis to provide an estimate of the clean speech signal.

Introduction

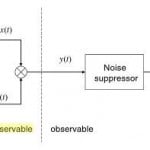

As the figure shows, noise reduction is inherently a signal estimation problem. Let x(t) and d(t) denote the clean speech and noise signals respectively. The observed speech signal is then defi ned as y(t) = x(t) + d(t). That is, the noise signal is assumed to be additive and unncorrelated with the speech signal. Now the observed noisy speech signal is simply a linear combination of the clean speech and noise.

Figure 1: Noise Reduction as Signal Estimation [4]

Thinking about these unobservable signals as random variables, their pairwise uncorrelation then grants us the ability to use linear maps into the other practically important signal domains. For instance, the frequency domain transform results in Y (ω) = (X +D)(ω) = X(ω)+D(ω). Both noise and speech are generally assumed to be N(0, σ) due to the central limit theorem [4], but some processing systems report better results modeling the speech as L(0, σ) [5] or Γ(k, θ) [6].

The figure illustrates, we only have access to the noisy speech signal. Thus, a speech enhancement system’s job is to accurately estimate these unobservable signals. To do this, it is of utmost importance to accurately estimate the noise power spectral density because it is needed to define a posteriori signal to noise ratio (SNR), to estimate the a priori SNR, and through those two estimators, derive the system’s suppression rule G. Thus, the quality of the entire system is dependent on the accuracy of the Noise Power Spectral Density Estimator.

Noise Power Spectral Density Estimation

The first and most important major block in a speech enhancement system is the Noise Power Spectral Density Estimator. Ideally, we would be able to sample the noise signal continuously in real time, however, in practice we must sample the noise PSD at discrete time intervals, and thus we need to derive a scheme to update the noise PSD as the speech is being processed. A variety of update schemes have so far been proposed in the literature.

The earliest methods simply assumed a pause in speech as in [7], resulting in the observed signal being only noise. From this noise only data, they could then estimate the PSD. When another noise only frame was detected, the noise PSD could be updated.

The next advancement came from the Minimum Statistics approach [8], which works from the assumption that the minimum power level in any given frequency bin when observed over a sufficiently long period represents the power in the noise signal. Therefore, we no longer need to wait for a pause in speech to update the PSD estimate. Instead, we can just look for the minimum power level within a frame.

Most recently, subspace approaches have invaded the frequency domain, and we now have Noise Tracking using DFT Domain Subspace Decompositions [9]. This approach appears better at tracking non-stationary noise than Minimum Statistic, as it does not need to search the entire frame for the minimum power. It also seems to be better at following noise PSD changes when speech is consistently present in a bin due to its subspace nature.

Welch’s Method

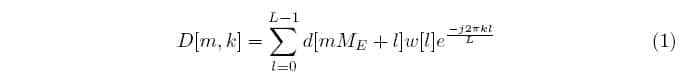

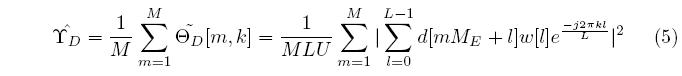

Assuming a pause in speech, our example uses Welch’s method [1] of utilizing a modified averaged periodogram as an estimate of the true PSD. To obtain this estimate, first map the noise signal d into the frequency domain using a short-time Fourier transform. For the mth frame, the kth frequency bin coefficient is computed as:

Where L is the STFT size, ME = xL, x ϵ [0, 1] denotes the frame shift factor, and w represents the window function.

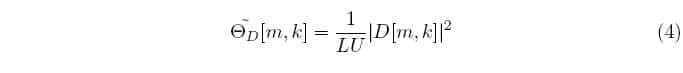

Use a smoothly tapered window to avoid introducing frequency domain artifacts and set the overlap between frames anywhere from 25-75%. More overlap will reduce spectral leakage and provide better frequency resolution, but will sacrifice algorithmic speed and simplicity. Now we can define the periodogram by:

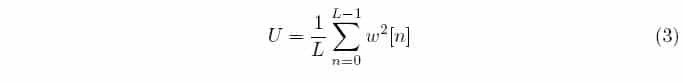

Since windowing attenuates the signal at both ends, it reduces the overall signal power. This so called Coherent Power Gain results in a DC bias for all the DFT frequency bins, which causes the spectral amplitudes to no longer reflect the amplitudes in the time domain preimage. To ensure the windowed signal spectrum is accurately represented, we normalize the power of the window via the window normalization factor U:

We have now arrived at Welch’s modified periodogram, which can be expressed as:

Finally, we average across frames to obtain Welch’s averaged modified periodogram, which is the estimator for the PSD:

It should be noted that this method is justified, as the periodogram is an asymptotically unbiased estimator of the PSD since:

Signal to Noise Ratio Estimation

The next stage in a speech enhancement system is the calculation of the a posteriori signal to noise ratio and the estimation of the a priori signal to noise ratio. The SNRs always define the system’s suppression function, which is used to clean up the speech signal by attenuating the noise, and so it is important to have an accurate result from these computations. The a posteriori SNR calculation is straightforward, however since we cannot observe the clean speech signal, accurate a priori SNR estimation is not a trivial task.

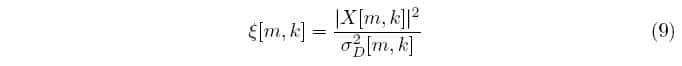

The a posteriori SNR is just the ratio the noisy speech signal’s power to the noise signal’s power. It is per frequency bin in order to provide a dense wideband suppression rule. The local a posteriori SNR is defined as:

While the frame a posteriori SNR is defined as:

The a priori SNR is the ratio of the clean speech signal’s power over the noise signal’s power. The local a priori SNR is defined below:

The frame a priori SNR is defined similarly as (8). Since we cannot observe the clean speech signal, we need to utilize an estimator of the a-priori SNR. There are various schemes to do this, but it all started in 1984 when Ephraim and Malah [10] first derived a real time a-priori SNR estimator. In our example, this is the estimator used.

Ephraim and Malah

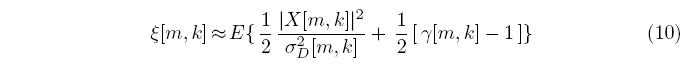

Generally, the SNR can only be updated when the PSD is updated, and so Ephraim and Malah devised a recursive process to estimate and update the a priori SNR. To obtain this estimate, they simply combined the two different SNR measures which results in:

Where γ[m, k] – 1 is another definition of the local a priori SNR. In order to use γ[m, k] – 1 as the local a-priori SNR, we must ensure that it is always positive as it may be the case that γ[m, k] ≤ 1. To accomplish this, we introduce the Heaviside step function H[.] defined as:

Thus we have:

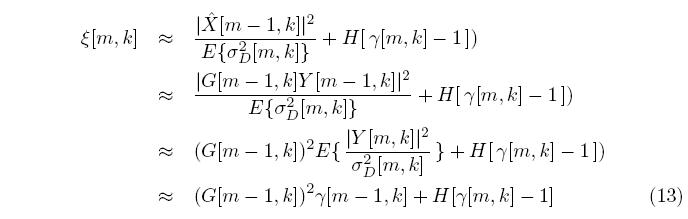

Since the frame size is much smaller than the length of the speech sound, we can assume quasi-stationarity in the speech signal. Expressing this mathematically, we let E {|X[m, k] |2} = | [m – 1, k] |2, where | [m – 1; k] |2 is the previous frame’s clean speech power estimation. Adding the assumption of quasi-stationarity for the noise signal allows us to utilize the same trick for |Y [m, k] |2 and σ2D [m, k]. To calculate | [m – 1, k] |, we use the system’s suppression rule. In other words, we write | [m – 1, k] |= |G[m –1, k]Y [m –1, k] | where G is the suppression function. Putting this all together:

Therefore, we have an estimate of the a-priori SNR as a linear combination of the a posteriori SNRs of the previous frame and the current frame. To introduce some flexibility into the estimation, we introduce a weight α ϵ [0, 1] and arrive at our final equation for the a priori SNR estimator:

Notes

The weight is chosen according to the level of stability your algorithm requires. You can effectively filter out disrupting transients arising from abrupt changes in the current frame’s a posteriori SNR by choosing α ~ 1. In effect, you are then weighting the previous frame’s statistics more highly and thus introducing a frame lag for stability. In the presence of highly non-stationary noise, it might be better to choose α ~ 0 to weight the current frame’s statistics more highly so that changes in the SNR can be better tracked.

Notice how all SNRs depend on the noise signal’s power, which illustrates that these measures’ accuracy can be affected by poor tracking of the noise signal’s non-stationary behavior. If these measures are not accurate, then the suppression rule will not be accurate either, and the system’s ability for speech enhancement will be compromised. Therefore, it is of optimal importance to always have an accurate estimator of the true noise PSD.

Voice Activity Detector

Classically, the SNRs were used to determine the presence of speech in an algorithm called a Voice Activity Detector (VAD) [4]. A VAD is an algorithm that decides on the presence or absence of speech in a given signal frame. A statistical-based VAD compares some function of the SNRs called the likelihood ratio to a threshold value. If this likelihood ratio exceeds the threshold, one hypothesis on the presence of speech is taken to be true, while the other is if the likelihood ratio falls short.

If the VAD decides that speech is absent, then a noise only frame has been found, and the noise PSD can be updated. The need for a VAD comes from the nonstationarity of the noise signal. Once one estimate of the noise PSD is computed, it cannot be assumed that the noise will forever be described by this PSD. Indeed, the changing statistics of the noise as a function of time demand accurate time domain tracking of the noise PSD to ensure proper quality of the enhanced speech signal.

Log-Likelihood VAD

To create the VAD, we suppose two hypotheses:

H0: Speech Absent => Y [m, k] = D[m, k]

H1: Speech Present => Y [m, k] = X[m, k] + D[m, k]

Then the likelihood ratio is defined as:

Taking the logarithm gives us the log-likelihood ratio:

A decision rule is created by then comparing the geometric mean of the likelihood ratio across all frequency bins to a threshold η:

Where:

H0 := Ψ < η

H1 := Ψ ≥ η

When H0 is satisfied as above, we have detected a noise only frame, and so the noise PSD can be updated as:

Suppression Rule

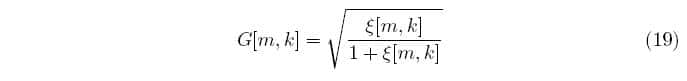

In essence, a suppression rule is a frequency bin specific gain function applied across the spectrum of the noisy speech signal in order to suppress the bins containing primarily noise while keeping speech dominated bins intact, thus resulting in an estimation of the spectrum of the clean speech signal. The clean speech signal can then be expressed as: X(ω ) = G(ξ, γ, ω ) Y (ω) where G is the suppression rule.

Spectral Subtraction Amplitude Estimator

A variety of suppression rules exist, often referred to in the literature as amplitude estimators. Some of these estimators are the Weiner suppression rule, the spectral subtraction amplitude estimator, and the Maximum Likelihood estimate [3,4]. The example algorithm using the modified spectral subtraction amplitude estimator [3] which takes into account the a priori SNR is given below:

The Algorithm

Initialization

%%==========Read the wavefile================%%

[NoisySpeech,Fs,NumBits] = wavread(filename);

NoisySpeech = NoisySpeech;

%%==========Set Parameter Values==========%%

APrioriSmoother = 0.98;

VADThreshold = 0.15;

NoisePSDSmoother = 0.98;

FrameDuration = 20;

FrameLength = FrameDuration * Fs/1000;

HammingWindow = hamming(FrameLength);

NormWindowPower = (HammingWindow’ * HammingWindow)/FrameLength;

%%==========Extract Noise===============%%

%%——first 120 ms is noise only——%%

NoiseLength = 120*(Fs/1000);

NoiseSamples = NoisySpeech(1:NoiseLength);

Noise PSD Estimation

%%==========Welch’s Method to Estimate the PSD of the Noise Signal========%%

NumSubFrames = floor(NoiseLength/(FrameLength/2))-1;

NoisePowerSpectrum = zeros(FrameLength,1);

OverlapIndex = 1;

for n = 1:NumSubFrames

RawNoise = NoiseSamples(OverlapIndex:OverlapIndex+FrameLength-1);

WindowedNoise = RawNoise.*HammingWindow;

WindowedNoiseFFT = fft(WindowedNoise,FrameLength);

NoisePowerSpectrum = NoisePowerSpectrum + …

… (abs(WindowedNoiseFFT).^2)/(FrameLength*NormWindowPower);

OverlapIndex = OverlapIndex + FrameLength/2;

end

NoisePowerSpectrum = NoisePowerSpectrum/NumSubFrames;

SNR Estimation

SpeechLength= FrameLength/ 2; % with 50% overlap

Nframes= floor( length( NoisySpeech)/ SpeechLength)- 1;

OverlapIndex = 1;

for n=1:Nframes

%%—–Estimate the Signal PSD——%%

NoisySignal = NoisySpeech(OverlapIndex:OverlapIndex + FrameLength-1);

WindowedNoisySignal = NoisySignal.*HammingWindow;

WindowedNoisySignalFFT = fft(WindowedNoisySignal,FrameLength);

WindowedNoisySignalPSD = (abs(WindowedNoisySignalFFT).^2)/(FrameLength*NormWindowPower);

%%==================SNR Estimation====================%%

%%—A-Posteriori SNR = NoisySignalPSD / ExpectedValueofNoisePSD—-%%

SNRPost = WindowedNoisySignalPSD./NoisePowerSpectrum;

SNRPostHat = SNRPost-1;

SNRPostHat(find(SNRPostHat<0))=0; %%zero out negative SNR estimates%%

%%—A-Priori SNR Estimator (4.66-4.68 Sound Capture)—%%

if(n==1) %%for the first frame, we initialize the A-Priori SNR estimator

SNRPrior = APrioriSmoother + (1-APrioriSmoother)*SNRPostHat;

else

SNRPrior = APrioriSmoother*(GainPrevious.^2).*SNRPostPrevious + …

… (1-APrioriSmoother)*SNRPostHat;

end

Voice Activity Detector

%%====================Voice Activity Detector========================%%

%%—Make the VAD Decision with the log likelihood ratio test (eq 4.46 Sound Capture)—LogLHRatio = SNRPost.*SNRPrior./(1+SNRPrior)-log(1+SNRPrior);

VADHypothesis(n) = sum(LogLHRatio)/FrameLength;

if(VADHypothesis(n)<VADThreshold)

%%Noise Only Frame (H0) Speech is absent%%

%%So update the Noise Power Spectrum Estimation%%

NoisePowerSpectrum = NoisePSDSmoother*NoisePowerSpectrum + …

… (1-NoisePSDSmoother)*WindowedNoisySignalPSD;

VAD(OverlapIndex:OverlapIndex+FrameLength-1)=0;

else

VAD(OverlapIndex:OverlapIndex+FrameLength-1)=1;

end

Suppression Rule

%%=========Suppression Rule==========%%

Gain = sqrt(SNRPrior./(1+SNRPrior));

EnhancedFrame = ifft(WindowedNoisySignalFFT.*Gain,FrameLength);

if (n==1)

EnhancedSpeech(OverlapIndex:OverlapIndex+(FrameLength/2)-1)=

EnhancedFrame(1:FrameLength/2);

else

EnhancedSpeech(OverlapIndex:OverlapIndex+(FrameLength/2)-1)=

Overlap+EnhancedFrame(1:FrameLength/2);

end

Overlap = EnhancedFrame((FrameLength/2)+1:FrameLength);

OverlapIndex = OverlapIndex + FrameLength/2;

GainPrevious = Gain;

SNRPostPrevious = SNRPost;

end

EnhancedSpeech(OverlapIndex:OverlapIndex + (FrameLength/2) – 1) = Overlap;

wavwrite(EnhancedSpeech,Fs,NumBits,outfile);

More Information

References

[1] P.D. Welch. “The use of fast Fourier transforms for the estimation of power spectra: A method based on time averaging over short modified periodograms.” IEEE Transactions on Audio and Electroacoustics, vol. 15, pp.70-73, 1967

[2] Ephraim Y., Malah D. “Speech enhancement using a minimum mean-square error log-spectral amplitude estimator.” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. ASSP-23(2), pp.443-445, 1985

[3] Scalart P. “Speech Enhancement Based On A Priori Signal To Noise Estimation.” IEEE International Conference on Acoustics Speech and Signal Processing, 1996, pp.629-632.

[4] Tashev I. Sound Capture and Processing. Chichester, UK: John Wiley and Sons Ltd, 2009

[5] Rashidinejad M., Abutalebi H., Tadaion A. “`Speech Enhancement using an Improved MMSE Estimator with Laplacian Prior” Proceedings of 5th International Symposium on Telecommunications, 2010

[6] Martin R. “Speech enhancement using MMSE short time spectral estimation with Gamma distributed speech priors,” Proc. IEEE Int. Conf. Acoustics, Speech, Signal Processing, ICASSP ’02, Orlando, Fla, USA, May 2002.

[7] Sohn, J., Kim, N., Sung W. “A statistical model based voice activity detector.” IEEE Signal Processing Letters, 1999, pp.1-3.

[8] Martin R. “Noise Power Spectral Density Estimation Based on Optimal Smoothing and Minimum Statistics.” IEEE Transactions on Speech and Audio Processing, vol. 9, no. 5, July 2001

[9] Hendriks R., Jensen J., Heusdens R. “Noise Tracking Using DFT Domain Subspace Decompositions” IEEE Transactions on Audio, Speech, and Language Processing, vol. 16, no. 3, March 2008

[10] Ephraim Y., Malah D. “Speech Enhancement Using a Minimum Mean-Square Error Short-Time Spectral Amplitude Estimator” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. ASSP-32, pp.1109-1121, Dec. 1984.

[11] P.C. Loizou Speech Enhancement: Theory and Practice. London, UK: Taylor and Francis, 2007