The performance of noise reduction algorithms based on spectral subtraction depends heavily on the accuracy of the noise spectrum estimate. Voice activity detection (VAD) indicates whether local speech is present or absent. Noise estimation can therefore be carried out while the local speech is silent, that is, when the frames under consideration contain noise only.

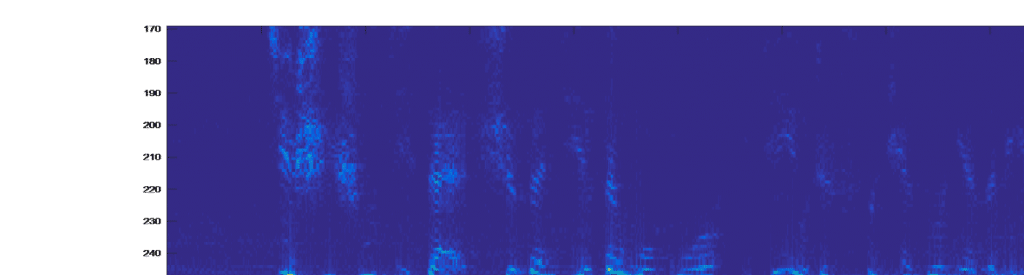

Figure 1 shows a typical noisy speech spectrum. The blue background represents the background noise, while the light-colored areas represent the speech segments. If a VAD can exclude the speech segments, the background noise can easily be estimated by windowed averaging over the remaining noise-only frames. Most commonly used noise estimation algorithms follow this approach.
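A minimal sketch of the VAD-gated windowed averaging described above, assuming the per-frame power spectra (|X(k)|^2 for each frequency bin k) and a boolean VAD decision per frame are already available. The function name `estimate_noise_spectrum`, the default window of 20 frames, and the toy input values are hypothetical. During speech frames the last noise estimate is simply held.

```python
from collections import deque


def estimate_noise_spectrum(power_frames, vad_flags, window=20):
    """VAD-gated noise estimation by windowed averaging (illustrative sketch).

    power_frames: list of frames, each a list of per-bin power values |X(k)|^2
    vad_flags:    list of booleans, True where the VAD detects speech
    window:       number of most recent noise-only frames to average
                  (hypothetical default; tune per application)
    Returns one noise-spectrum estimate (list of per-bin values) per input frame.
    """
    num_bins = len(power_frames[0])
    history = deque(maxlen=window)  # keeps only the most recent noise-only frames
    current = [0.0] * num_bins      # no estimate until the first noise-only frame
    estimates = []
    for frame, is_speech in zip(power_frames, vad_flags):
        if not is_speech:
            history.append(frame)
            # Per-bin average over the noise-only frames currently in the window.
            current = [sum(f[k] for f in history) / len(history)
                       for k in range(num_bins)]
        # During speech frames the previous noise estimate is held unchanged.
        estimates.append(list(current))
    return estimates


# Toy example: 4 noise-only frames, 3 speech frames, then 1 noise-only frame.
frames = [[1.0, 2.0]] * 4 + [[10.0, 20.0]] * 3 + [[3.0, 4.0]]
vad = [False] * 4 + [True] * 3 + [False]
est = estimate_noise_spectrum(frames, vad, window=2)
print(est[5])  # estimate held during speech
print(est[7])  # average of the last two noise-only frames
```

The hold-during-speech behavior is exactly why the estimate goes stale when speech lasts a long time, which motivates the concerns raised below.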

This simple procedure works well when the background noise is stationary. In many applications, however, the background noise is highly dynamic: its variance can change dramatically over time. Averaging over a long period or many frames therefore does not give a good indication of the local noise level. Choosing the window length, which trades the ability to track noise dynamics against estimation accuracy, is more an art than a science.
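To make this tradeoff concrete, the sketch below uses first-order recursive (exponential) averaging, a common alternative to a fixed window that the text above does not itself describe. Here the smoothing factor `alpha` plays the role of the window length, with an effective memory of roughly 1 / (1 - alpha) frames. The function names, the alpha values 0.5 and 0.95, and the synthetic noise levels are illustrative assumptions, and every frame is assumed to be noise-only (already gated by a VAD).

```python
def recursive_average(frame_powers, alpha, initial):
    """First-order recursive averaging of noise power in one frequency bin.

    Each noise-only frame updates the estimate as
        N <- alpha * N + (1 - alpha) * |X|^2
    A larger alpha means a longer effective memory: a smoother estimate
    that is slower to follow changes in the noise level.
    """
    estimate = initial
    history = []
    for power in frame_powers:
        estimate = alpha * estimate + (1.0 - alpha) * power
        history.append(estimate)
    return history


def spread(values):
    """Peak-to-peak fluctuation of an estimate over a stretch of frames."""
    return max(values) - min(values)


# Case 1: non-stationary noise, power steps from 1.0 to 4.0.
step = [1.0] * 20 + [4.0] * 10
fast_step = recursive_average(step, alpha=0.5, initial=1.0)
slow_step = recursive_average(step, alpha=0.95, initial=1.0)

# Case 2: stationary noise whose per-frame power fluctuates around 1.0.
jitter = [0.5, 1.5] * 20
fast_jit = recursive_average(jitter, alpha=0.5, initial=1.0)
slow_jit = recursive_average(jitter, alpha=0.95, initial=1.0)

print(f"10 frames after step: fast={fast_step[-1]:.3f}, slow={slow_step[-1]:.3f}")
print(f"steady-state spread:  fast={spread(fast_jit[-10:]):.3f}, "
      f"slow={spread(slow_jit[-10:]):.3f}")
```

The fast tracker reaches the new noise level within a few frames while the slow one is still well short of it, but on the fluctuating input the ordering reverses: the slow tracker gives a much steadier estimate. No single `alpha` wins in both cases.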

Beyond noise dynamics, performance would also improve if the noise estimate could be updated continuously, without interruption, during segments that contain speech. In some applications, constant double talk may be the norm rather than the exception. In such cases, the ability to analyze the speech segments and estimate the background noise within them is critical. We will address this issue in a separate article.