Viterbi Algorithm

The Viterbi algorithm is used in many disciplines. The algorithm is an approach to finding the most likely sequence of hidden states and the generated sequence of states is called the Viterbi path (cf. Ref.[1,2]).

Applications of the Viterbi algorithm include decoding convolutional codes in Telecommunication (specifically in CDMA, GSM, satellite and other technologies that use digital coding/decoding of signals) as well as applications in speech recognition, speech synthesis, speech enhancement and other technologies.

Current speech enhancement techniques can be categorized into two major classes: the model-free methods (and they include spectral subtraction, for example) and the model-based methods, such as hidden Markov model (HMM) based speech enhancement algorithms (cf. Ref [3]).

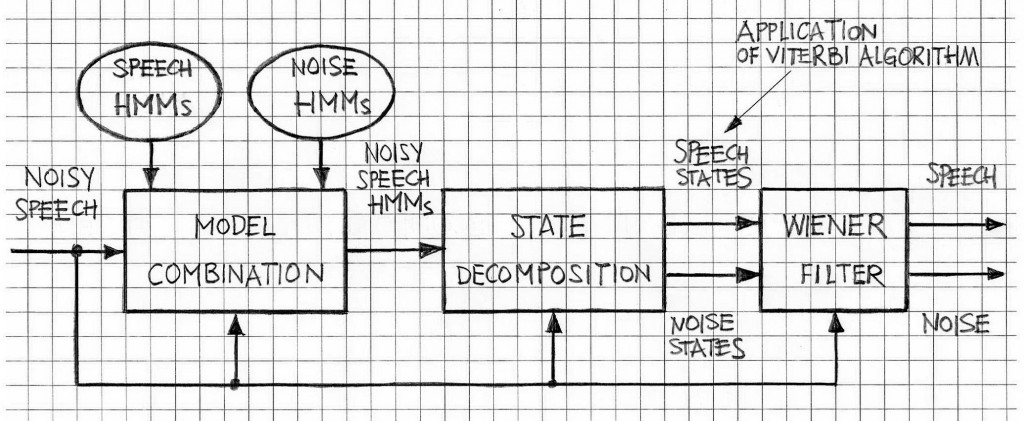

The HMM-based approach to Speech Enhancement heavily relies on the Viterbi algorithm. In such an application the Viterbi algorithm efficiently constructs the Viterbi path, i.e., the most likely sequence of an HMM, as measured using the maximum a posteriori (MAP) estimate of the underlying sequence (cf. Ref.[1,4]). Figure 1 illustrates an outline of HMM-based noisy speech enhancement and points to the stage in the process where the Viterbi algorithm is applied.

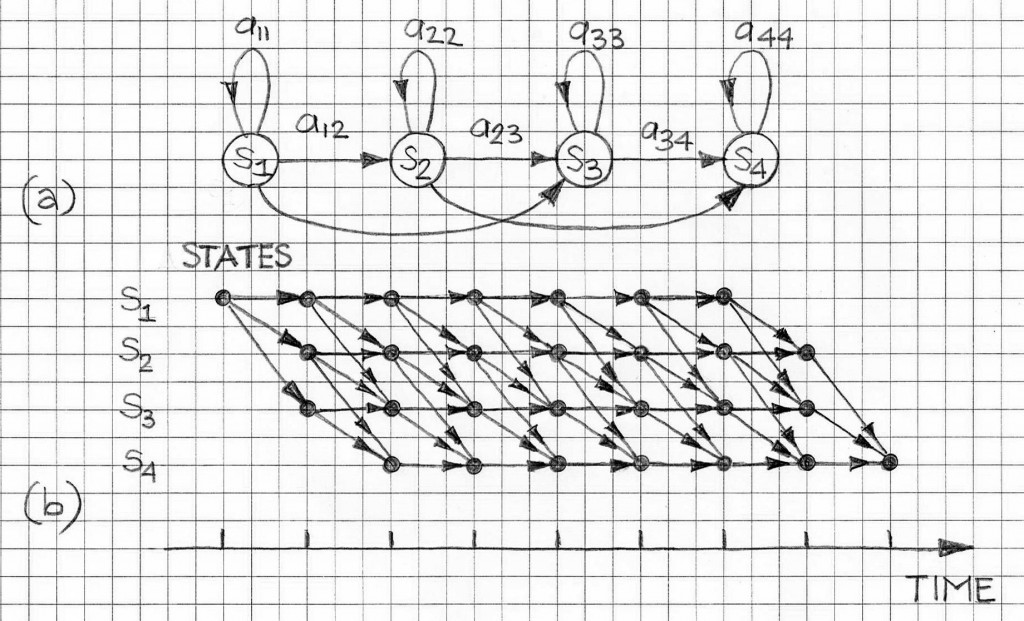

In its “pure” form the Viterbi algorithm can be considered as a “network” in which the nodes represent states at different discrete time points while branches represent transitions from previous states to the current states; the weights assigned to the branches (a.k.a. forward probability variables) that are expressed in terms of MAP estimates. Figure 2 illustrates the Viterbi algorithm concept in a form of the generic network of states and branches.

Notation used in Figure 2 is as follows:

si(t), i = 1, 2,…,N; t = 1,2,…,T; individual states at distinct times (here N = 4 and T=9);

aij, i,j = 1,2,…,N; transition probabilities.

Matrix A = { aij, i,j = 1,2,…,N } is a state transition-probability matrix.

Matrix S(t) = { si(t), i = 1, 2,…,N } is a state matrix.

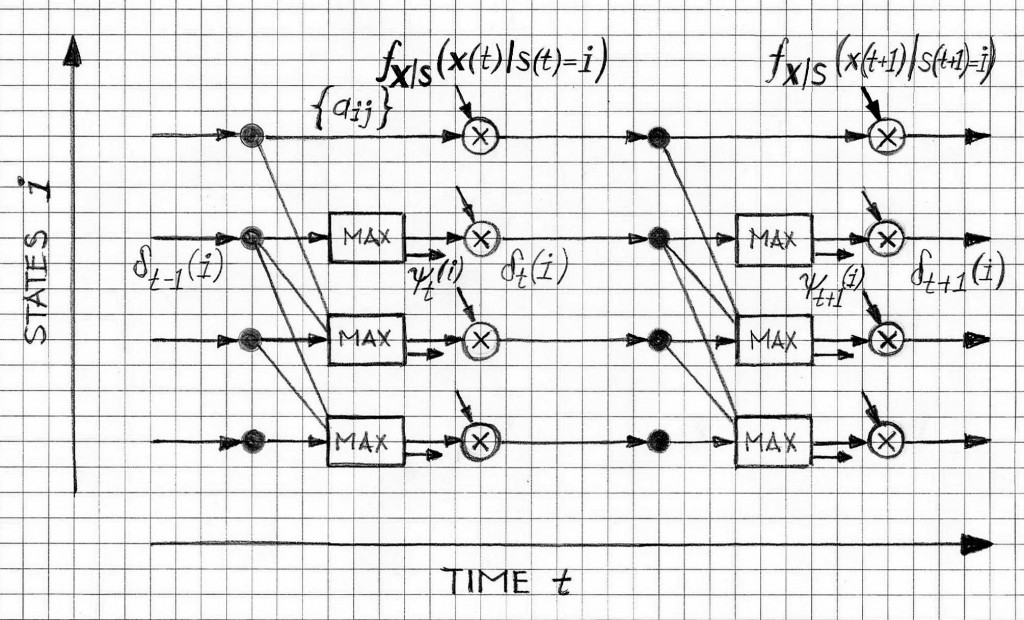

A more specific illustration of the Viterbi algorithm with selected algorithmic details is shown in Figure 3.

For an N-state HMM and an observation sequence of length T, there are NT state sequences (i.e., possible Viterbi paths). Note that even for very moderate values of N and T (for example N=4 and T=9), the total number of state sequences is already quite large (in this case, 262144) and in practical cases an exhaustive search of the state-time trellis for the best state sequence is computationally prohibitive. The Viterbi algorithm is key to making computation of the most likely state sequence of an HMM practical and easy to implement. While Figure 3 sheds some light on the Viterbi algorithm, below are specific steps illustrating its relative simplicity.

VITERBI ALGORITHM – OUTLINE

Variable δt(i) records the cumulative probability of the best path to state i and time t;

Variable ψt(i) records the best state sequence to state i and time t;

Step 1: Initialization of variables δt=0(i) and ψt=0 (i);

Step 2: Recursive calculation of the ML (maximum likelihood) state sequences and their probabilities as per the following pseudo-code:

For time t=1,2,…,T-1

For states I = 1,2,…,N

Compute

δt(i) = max[δt-1(j). aji ] . fi(x(t)); max(.) performed over all j;

ψt(i) = arg{max[δt-1(j) . aji]}; max(.) performed over all j;

End states

End time

Step 3: Termination: retrieve the most likely final state as per the following

sMAP(T-1) = arg{max[δT-1(i)]}; max(.) performed over all i;

Probmax – max[δT-1(i)] ; max(.) performed over all i;

Step 4: Backtracking: retrieval of the most likely state sequences (i.e., the Viterbi path) of the HMM.

For time t=1,2,…,T-1

sMAP(t) = ψt+1[sMAP(t+1)];

End time

– Notation used in Steps 1 through 4 is as follows:

– x(t) is the observation signal sequence at time t;

– sMAP(t) is the MAP state sequence of the HMM at time t;

– fi[x(t)] = fX|S(x(t)|s(t) = i); fi(.) are elements of the signal space model with noise mixtures that include Gaussian probability density functions, for the given data set (cf. Figure 1);

– X is an observation sequence as below:

X = [x(0), x(1),…, x(T-1)];

The most likely state sequence (the Viterbi path) of the model under consideration can be used as the probability score for the model and the observation X. For example, in speech recognition or in speech enhancement for that matter, for each candidate word or phoneme, the probability of the observation and the most likely state sequence is calculated and then the observation is tagged with the word, of phoneme, that achieves the highest probability score.

In retrospect, the Viterbi algorithm, apart from being a tremendously useful approach to finding the most likely sequence of hidden states with so essential applications in Telecommunication, speech recognition, modern model-based speech enhancement and elsewhere, it can be merely valued as a means to mitigate the overwhelming burden of computations when choosing the most likely sequence. However, the reality is that in typical situations with the number of states N being greater than 100 and the observation sequence length T being greater than 1000, the direct approach just cannot realistically be pursued as the total processing power of all existing CPU in the world is not sufficient to deal with such a task. With that in mind, a recursive approach makes the outlined task implementable and the Viterbi algorithm is a very efficient example of recursion with so many different and important applications.

VOCAL’s Voice Enhancement solutions include noise reduction software solutions that have been tested in typical acoustic environment. These solutions can be modified to fit custom specification and they can be used in conjunction with speech-model-based solutions, including HMM-based approaches, as required.

REFERENCES

- Hidden Markov Models (Section 5), in Advanced Digital Signal Processing and Noise Reduction, by Vaseghi, S V., A John Wiley and Sons, Ltd. 2001

- The Viterbi Algorithm, G. David Froney, Proc. Of the IEEE, No3, Mach 1973; p.268-278.

- Speech Enhancement Using Voice Source Models, by Yasmin, University of Waterloo, 1999.