Fixed point processors are generally smaller and consume less power than the familiar general purpose floating point processor does. In an application like acoustic echo control in mobile, the size and power of the DSP are crucial to providing long lasting service in a tiny enclosure. For the most common operations like addition and multiplication, fixed point offers a critical speed advantage, which is again ideal for any type of real time echo cancellation. Basically, using fixed point mathematics offers a unique opportunity to optimize the performance of your DSP.

Definition: A Fixed Point Number X(a, b) is an N-bit binary representation of q ϵ ℚ, such that q = a/b where b = 2n and n ϵ ℤ+

In other words, fixed point binary numbers are meant to represent a subset of the rational numbers. Take this in contrast with the natural binary numbers that everyone knows. As you have seen before, the natural binary numbers are binary representations of ℤ+ the non-negative integers. Just like with binary integers, fixed point numbers have unsigned, signed, and two’s complement representations. To get more into detail about what exactly fixed point numbers are, we will consider a general unsigned fixed point representation.

Fixed Point Representation

Consider an N-bit binary number. As an unsigned fixed point number, we will represent it as U(a, b) following [1]. The U(a, b) representation explicitly illustrates that our number has a integer bits and b fractional bits. Since each bit uk ϵ {0, 1} has weight 2k, U(a, b) can be represented as:

While this understanding is certainly instructive, your fixed point microprocessor does not understand the idea of the “decimal” point in (1) and so cannot give you a weight of 2-k. So instead, we represent U(a, b) as:

We can use (2) to represent integer binary numbers in fixed point format. Signed format is represented as S(a, b) and requires the addition of a sign bit as usual.

Converting to Fixed Point

Now that we understand the fixed point representation, we are going to consider how we can convert ‘real’ numbers to fixed point ones as in [2]. Real is in quotes because you will almost surely not be able to represent an arbitrary real number in a computer without some loss of precision. This is due to density of ℝ versus the finite storage space available in any computing machine. Forgetting about this technicality, we will start by considering that to represent any number in fixed point, we need to divide it into its integer and fractional parts.

Unsigned Integer Conversion

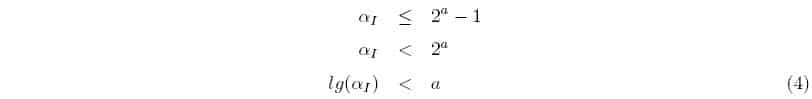

We will start by considering how to convert the integer part of our number when it is unsigned. Consider an arbitrary real number α that we want to represent as U(a, b). The integer part αI can take values between:

Solving for a, we get:

Where lg(•) is a widely used notation for the base 2 logarithm. We can ensure we pick an a ϵ ℤ that will always satisfy the solution by computing:

Where ⌊•⌋ represents the integer floor of αI. To floor a number means to round it down to the nearest integer.

Signed Integer Conversion

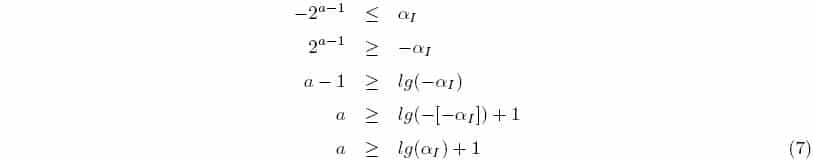

Similarly for a signed integer representation S(a, b), the integer part αI can take values between:

When αI is negative, we solve for the left inequality:

Where we have explicitly used the fact that αI < 0. Solving the right inequality by analogy to (6) shows us that when αI > 0 we have a > lg(αI) + 1. Looking at these two expressions, we see that one side of the inequality is stricter than the other. Considering this fact, by analogy to (7) we conclude:

Where αImin and αImax are the most negative and most positive numbers αI may represent.

Fractional Part Conversion

To start understanding how to represent the fractional part αF of our number α, we need the following:

Definition: The resolution of a fixed point number is the smallest magnitude representable, given by:

Similarly to the previous discussion, the number of fractional bits needed for a particular resolution is then:

Where ⌈•⌉ represents the ceiling operator, which takes a number and rounds up to the nearest integer.

Conversion Formula

Given our number α, the fixed point base-10 integer representation is given by:

Notice that all together, we have an 18 bit number, which is not a standard register size. The next largest standard register size is 32 bits, so in fact, we could increase our resolution. Often, designers are faced with a predetermined register size and given a range of inputs they will need to represent. To ensure all of the incoming values can be represented, they will first determine the required number of integer bits. The remaining bits in the register will then be used for the fractional part, and the resolution that results is the resolution of the system.

Fixed Point Operations

We will first consider the results of fixed point operations on the binary representations of fixed point numbers [1,3].

Addition: Z = X(a,b) + Y(c,d) requires:

Subtraction is similar, and can be done using two’s complement addition. Thus to perform addition or subtraction, we need to do some shifting if the formats of X and Y are not the same. To do this efficiently, we will implement a virtual shift. To best understand what a virtual shift does, which requires that we consider yet another representation of a fixed point number X(a,b):

That is, we can separate our fixed point number into its natural binary representation αfx2 and a binary point shifting operation 2-b. Notice that this is just the reverse operation of (15). Now we can define the virtual shift:

Definition: A virtual shift is a reinterpretation of the scaling of X(a,b) that has infinite precision and no overflow. A virtual shift from X(a,b) to X(c,d) is performed via:

Multiplication:

Which is performed as:

Division is usually a complex and expensive operation. To get around this difficulty, division is often formulated as a series of (inverse) products. Thus, division can be handled in the above manner.

An Example

Compute α•β+к in fixed point when α = 1:50 , β = -1:25 , and к = π given a maximum register size of 8 bits.

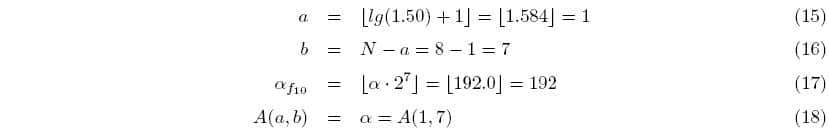

First, we must find the fixed point representations of each number. Notice that к is an irrational number, thus there is no way its full representation is going to fit in any realizable register, let alone an 8 bit one, regardless of format and so we know that we are going to lose precision. To illustrate the process, we will calculate αfx.

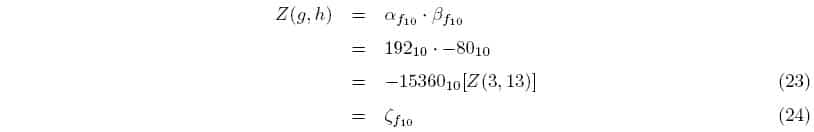

Let A(a, b) = α, B(c, d) = β, K(e, f) = к and X(x, y) be the result. Now we have:

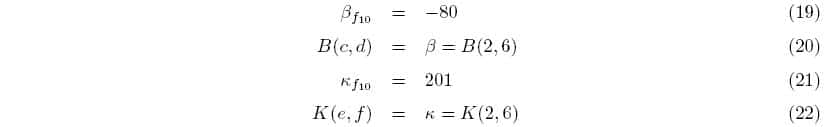

Similarly, we have:

Now we perform the computation in the order of operations. Starting with the multiplication, we obtain:

Here, Z is given in 16 bit format, so we need to scale it down to something an 8 bit microprocessor can handle using the virtual shifting operation. Since we are going to have to add it to к and addition requires the same format, lets shift our result to K(2,6):

Now we can perform the addition:

Converting to binary and then out of fixed point, we have:

Thus our error is:

Stability in Fixed Point Filtering

In developing any filter, you either have a choice of an FIR or IIR scheme. Since FIR filters have no poles, FIR filters will always be stable, but this is not the case for IIR filters. This is because quantization causes poles to shift along a grid, and there exists the possibility of overflow. Here we will focus arithmetic issues in fixed point IIR filters.

Limit Cycles

A fixed point IIR filter enters a limit cycle when its output oscillates between –R,R where R is the range of the format. Limit cycles can only happen in IIR filters because of their recursive nature. A limit cycle can be entered due to quantization or due to register overflow of an intermediate result. These two types of limit cycles are referred to in [4] as multiplier roundoff limit cycle and adder overflow limit cycle respectively. For an example, please refer to [4].

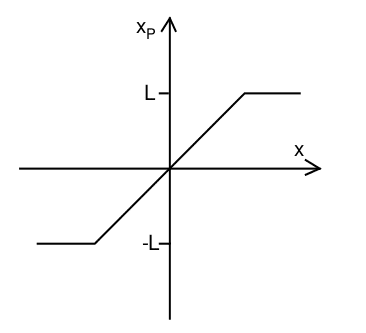

To ensure a limit cycle does not happen, we need to add stability to our IIR filter. Often, designers scale all input values between the ranges of [-1, 1] so that the coefficients of any filter can also be within that range therefore adding stability. Another way is to pair the quantization format with an overflow scheme. An overflow scheme tells the system what to do in the event of any range overflow. One of the common overflow schemes is called saturation and is depicted below:

Figure 1: Saturation Overflow Scheme [5]

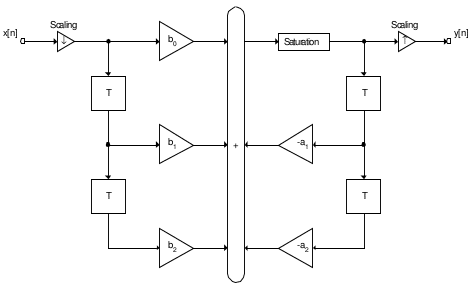

Generally, higher order IIR filters can be made more stable by building them from cascading lower order blocks. One such block that will prevent limit cycles is given in [5]. This example first down scales the input, saturates the result if out of range, and then transforms the input back to its previous scale. It is presented below: Figure 2: Limit Cycle Stable IIR [5]

Figure 2: Limit Cycle Stable IIR [5]

Another way to prevent limit cycles is to appropriately choose your fixed point format such that guard bits exist. In the event that you are not sure what format to use but have access to sample data, [6] provides a simple method to determine the optimal word length. Given a fixed register size, the idea is to perform a type of Monte Carlo simulation across all possible formats given a test suite of random data vectors. An acceptable format is one that incurs no overflow during arithmetic or quantization and division by zero never occurs. The best format in the set of acceptable formats is the one with the smallest mean quantization error.

References

[1] R. Yates, “Fixed-point Arithmetic: An Introduction.” Digital Signal Labs, August 2007

[2] E. L. Oberstar, “Fixed-point representation and fractional Math.” Oberstar Consulting, August

2007

[3] S.Rein, M. Reisslein, “Low-Memory Wavelet Transforms for Wireless Sensor Networks: A Tutorial”, IEEE Communications Surveys and Tutorials, vol. 13, no. 2, 2011

[4] B.W. Bomar, “Finite Worldlength Effects”, Digital Signal Processing Handbook, Boca Raton: CRC Press LLC, 1999

[5] M. Sarkar, “A Floating-Point to Fixed-Point Conversion Methodology for Audio Algorithms”, Technical Report, Delphi, India, 2004

[6] V. Rodellar, “Optimal Arithmetic Dimension of Adaptive Filters for Speech Characterization”, MELECON 96, Madrid, Spain, 1996