Modern communications have quickly shifted toward hands-free, speakerphone-like systems such as videoconferencing systems, laptops, and tablets. In these systems the speaker is often located in an enclosed room at a relatively large distance from the microphone. This configuration creates acoustic signal processing challenges that are not present in handset or headset telecommunications, because the increased distance between the speaker and the microphone greatly reduces the signal-to-noise ratio (SNR).

Reverberant Environments

Environmental noise sources include ambient noise, interferers, and reverberations of the desired speaker. Acoustic signal processing algorithms provide spatial processing and adaptive beamforming solutions designed to reduce the effect of interferers (e.g., other non-desired speakers), and dereverberation solutions designed to reduce the effect of reverberations (self-reflections). There are advantages and disadvantages to the different ways these beamforming and dereverberation algorithms can be combined.
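As an illustration, the three noise sources can be written as a simple signal model. This is a common textbook formulation rather than one given in this article, and all symbols are assumptions introduced here: $s$ is the desired speech, $h_m$ is the room impulse response from the speaker to microphone $m$, $i_m$ is the contribution of the interferers, and $v_m$ is ambient noise.

$$
x_m(n) = \sum_{k=0}^{L-1} h_m(k)\, s(n-k) + i_m(n) + v_m(n)
$$

Under this model the earliest tap of $h_m$ is the direct path, and the remaining taps are the reflections that dereverberation tries to suppress.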

When choosing an adaptive beamforming algorithm for reverberant environments, there are several issues to consider. One issue is that many beamforming algorithms rely on the statistical independence of the desired signal and the interfering signals arriving from other directions. Reverberation is the desired speech convolved with the acoustic response of the room, so the reflections that arrive from other directions are correlated with the desired speech rather than independent of it. This results in poor cancellation of interferers and partial cancellation of the desired speaker. Considering this issue, a fixed beamformer, such as a delay-and-sum or a filter-and-sum beamformer, can prove more suitable than an adaptive beamformer in reverberant environments.
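A fixed delay-and-sum beamformer can be sketched in a few lines. The version below is a minimal illustration, assuming integer sample delays that are already known for the desired speaker. The function name `delay_and_sum` and the toy signals are hypothetical, and a practical implementation would need fractional delays derived from the real array geometry.

```python
def delay_and_sum(channels, delays):
    """Align each channel by its integer sample delay, then average.

    channels: list of equal-length lists of samples, one per microphone.
    delays:   assumed arrival delay (in samples) of the desired speaker
              at each microphone, relative to the earliest microphone.
    """
    if len(channels) != len(delays):
        raise ValueError("need exactly one delay per channel")
    n = len(channels[0])
    out = [0.0] * n
    for samples, delay in zip(channels, delays):
        for t in range(n - delay):
            # Advance the channel by `delay` samples so that the desired
            # speaker lines up across all microphones before summing.
            out[t] += samples[t + delay]
    # Averaging keeps the aligned (desired) signal at its original level.
    return [value / len(channels) for value in out]


# Toy example: the same short pulse reaches the second microphone
# two samples later than the first.
source = [0.0, 1.0, 0.5, 0.0, 0.0, 0.0]
mic_a = list(source)
mic_b = [0.0, 0.0] + source[:-2]

aligned = delay_and_sum([mic_a, mic_b], [0, 2])
```

Because the weights are fixed by the steering delays and do not adapt to signal statistics, correlated reflections cannot drive this structure to cancel the desired speaker.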

Adaptive Beamforming and Dereverberation

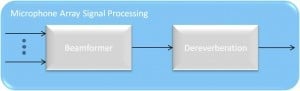

There are three basic ways to combine a spatial processor with dereverberation. The first method performs adaptive beamforming first and then applies dereverberation as a post-process on the beamformer output, as illustrated in Figure 1.

Figure 1: Method 1 – Microphone array signal processed by adaptive beamforming followed by dereverberation.

Dereverberation and Adaptive Beamforming

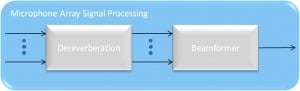

The second method is the reverse of the first: dereverberation is performed first on each channel independently, and the resulting outputs are the inputs to the adaptive beamformer, as illustrated in Figure 2.

Figure 2: Method 2 – Microphone array signal processed by dereverberation followed by adaptive beamforming.

Combined Adaptive Beamforming and Dereverberation

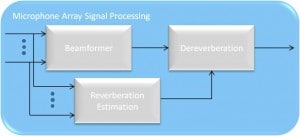

The third possible configuration, illustrated in Figure 3, combines the spatial processor and dereverberation: reverberation statistics are collected separately on each channel and then fed to a post-process that operates on the output of the adaptive beamformer.

Figure 3: Method 3 – Microphone array signal processing using adaptive beamforming with reverberation estimation.

The advantage of Method 1 is that it has the lowest computational complexity of the three methods. The disadvantage is that the beamformer can introduce correlated late reflections that cause estimation errors in the speech dereverberation algorithm, which in turn can produce undesirable speech distortion.

Method 2, on the other hand, does not have the correlation issue of Method 1, but it has the highest computational complexity because dereverberation must run on every channel. The last configuration, Method 3, combines the advantages of both. Since the estimation of reverberation statistics is done separately from beamforming, the correlation issue is avoided. In addition, the computational complexity is lower than that of Method 2, since the entire dereverberation process does not have to be performed on each channel.
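The ordering of the three methods, and where the work is spent, can be made concrete with a small sketch. Every stage below is a hypothetical placeholder, not a real beamformer or dereverberation algorithm: `beamform` is a plain average, `estimate_reverb` returns a single number, and `suppress` applies one gain. Averaging the per-channel estimates in `method_3` is also an assumption made only for illustration. The point is to count how often each stage runs.

```python
# Counters for how many times each dereverberation stage is executed.
calls = {"estimate": 0, "suppress": 0}

def beamform(channels):
    # Placeholder spatial processor: plain average across microphones.
    n = len(channels[0])
    return [sum(ch[t] for ch in channels) / len(channels) for t in range(n)]

def estimate_reverb(signal):
    # Placeholder statistic standing in for a reverberation estimate.
    calls["estimate"] += 1
    return sum(abs(v) for v in signal) / len(signal)

def suppress(signal, reverb_level):
    # Placeholder post-filter: one gain derived from the estimate.
    calls["suppress"] += 1
    gain = 1.0 / (1.0 + reverb_level)
    return [gain * v for v in signal]

def dereverberate(signal):
    # Full single-channel dereverberation: estimate, then suppress.
    return suppress(signal, estimate_reverb(signal))

def method_1(channels):
    # Beamform first, then dereverberate the single output.
    return dereverberate(beamform(channels))

def method_2(channels):
    # Dereverberate every channel, then beamform the cleaned channels.
    return beamform([dereverberate(ch) for ch in channels])

def method_3(channels):
    # Estimate statistics on each raw channel, beamform the raw channels,
    # then apply a single post-filter to the beamformer output.
    levels = [estimate_reverb(ch) for ch in channels]
    combined = sum(levels) / len(levels)  # assumed way to merge estimates
    return suppress(beamform(channels), combined)

def count(method, channels):
    # Reset the counters, run one method, and report (estimates, filters).
    calls["estimate"] = calls["suppress"] = 0
    method(channels)
    return (calls["estimate"], calls["suppress"])

mics = [[1.0, 0.0, -1.0, 0.0],
        [0.5, 0.5, -0.5, -0.5],
        [0.0, 1.0, 0.0, -1.0]]
counts = {m.__name__: count(m, mics) for m in (method_1, method_2, method_3)}
```

With three microphones, Method 1 runs each stage once, Method 2 runs both stages on every channel, and Method 3 estimates on every channel but filters only once. In Method 3 the estimates are also taken from the raw channels, before the beamformer can alter the reflections.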