Beamforming techniques try to steer the beam response in the desired direction with a fixed response. That is, the beamshape is kept as invariant as possible, and it is only the direction at which this shape points that changes [1].

Classic Beamforming

For arrays with a limited angle resolution, steering the beam will reduce the aperture and thus cause distortions in the beam shape. It is then up to an appropriately chosen weighting vector to make up the difference. Let the desired response be ![]() . Then we attempt to

. Then we attempt to

Where Ad is the microphone array manifold, and ![]() is the weighting vector. When AAH is non-singular, we can use the psuedo-inverse to calculate the optimal weights:

is the weighting vector. When AAH is non-singular, we can use the psuedo-inverse to calculate the optimal weights:

The condition number of A is an important point. When the condition number is large, it means that we have not sampled the potential steering directions finely enough. In this case, it will cause ![]() to be large, and thus the noise gain

to be large, and thus the noise gain ![]() to be large. This will severely lower the SNR causing inaccurate statistics to be collected.

to be large. This will severely lower the SNR causing inaccurate statistics to be collected.

We can correct this problem by using fixed beamforming techniques like beamscanning, which compute beamshapes for every five degrees of arrival or so, and simply switch between the beam shapes based on calculated DOA. Obviously this method is suboptimal, but it has low computational overhead, and combined with frequency invariant designs, the error is mostly dependent on the accuracy of your online sound source localizer. A more advanced method is to use statistically optimal beamforming, which attempts to create the weights based on data statistics.

Statistically Optimal Beamforming

Many modern beamforming methods fall under the category of statistically optimal beamforming. There are many definitions of optimality, and many statistics to be chosen to optimize. One of the most successful and hence most used in the Linearly Constrained Minimum Variance Beamformer. In this optimization scheme, the complex weights are chosen such that the desired signals are passed with specific gain and specific phase. In other words, the weights minimize output power variance subject to a linear constraint that defines the desired response [1]. The problem then becomes

Using the Lagrange dual and relaxing the constraints, we can solve for the optimal weight vector directly which becomes:

When interference is at a known position (ϴi,фi) then the constraint forms a vector and becomes:

Which illustrates how we can use the optimization procedure to place nulls in desired directions, aka, Nullsteering. Specifically, we want to create nth order nulls in N specific directions. To start this procedure [2], we begin by creating the 0th order null. This corresponds to the second constraint above. We then create the 0th order constraint matrix as:

In general, the nth order null is found by:

Where ![]() is the wave vector and A(

is the wave vector and A(![]() ) denotes the array response. We place these in the matrix Cn as above:

) denotes the array response. We place these in the matrix Cn as above:

Then the total constraint matrix becomes:

Now the constraint is simply ![]() C = 0. The next step is to modify the weights we have already chosen for a desired response. For instance, we first design the weights according to MV DR and call that vector

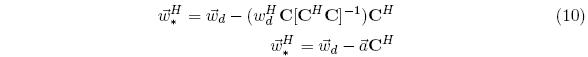

C = 0. The next step is to modify the weights we have already chosen for a desired response. For instance, we first design the weights according to MV DR and call that vector ![]() . We need to modify this vector to ensure nulls in the pre-determined directions. As [2] shows, the optimal weighting with introduced nulls is then:

. We need to modify this vector to ensure nulls in the pre-determined directions. As [2] shows, the optimal weighting with introduced nulls is then:

Instead of nullsteering, interference can also be minimized by designing the weights such that they minimize the supergain of the array [3]. In this case, the optimal weighting is:

Instead of using such complexity, it may be simpler to constrain the white noise gain altogether while maximizing the array gain:

Or simply minimize the output power, while constraining the white noise gain and the signal response:

Where δ is a small real number. Often it is useful to transform the matrices into block matrices for simpler implementation and block updating. This results in significant computational savings in the long run despite the initial overhead of the transformation, and the partial application of the updating schemes discussed next.

Adaptive Beamforming Techniques

Unfortunately the second order statistics present in the equations above are generally not known. In other words, we need to be able to approximate them well. The two ways to approximate these statistics are block or frame adaptation or continuous adaptation. Frame adaptation works by adjusting the weights on a per frame basis, while the continuous approach does so as each sample is read in. In speech applications, small frame sizes of 20 – 40ms are generally preferred as we can be confident that the statistics are stationary throughout the frame. The block adaptation technique can be done using standard least-mean-squares (LMS) algorithmic techniques [1,2]:

Where ![]() is the error between the actual and desired response. Of course, this algorithm’s stability and convergence rate can be improved by normalization, resulting in the well-known normalized least mean squares update equation:

is the error between the actual and desired response. Of course, this algorithm’s stability and convergence rate can be improved by normalization, resulting in the well-known normalized least mean squares update equation:

Recently the block-based normalized least mean square algorithm has been proposed [4]. In this scheme, the maximum value of a block is used as the normalization. The update equation is:

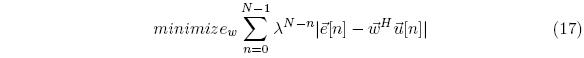

Where ![]() . As the authors show, this algorithm is more stable and converges faster than standard NLMS. In addition, its authors show it performs well in adaptive beamsteering in a multi-path environment. In addition to least mean square type algorithms, the recursive least squares procedure can also be used, and its objective function is defined as:

. As the authors show, this algorithm is more stable and converges faster than standard NLMS. In addition, its authors show it performs well in adaptive beamsteering in a multi-path environment. In addition to least mean square type algorithms, the recursive least squares procedure can also be used, and its objective function is defined as:

This algorithm is often faster than the lms equivalent because it doesn’t depend on the dispersion of the autocorrelation eigenvalues. In fact, most of the update schemes applicable to echo cancellation will be applicable for beamforming weight adjustment. The problem with these methods is their instability in the presence of interference such as multipath, echo, or low SNR [2]. In addition, these methods merely approximate the optimal weights discussed in the previous section, which themselves are derived from unrealistic assumptions, and therefore there is error inherent.

Despite these obvious drawbacks, there are some things we can do to increase their stability and accuracy. First, we can integrate single channel noise, echo cancellation, and dereverberation techniques into the beamformer as either a pre- or post-process. Second, we can introduce dithering to force constant tracking and avoid getting stuck in local minima. Third, we can explicitly incorporate models of the interference into the weight derivation as detailed in [2]. In combination, optimal adaptive beamforming is realized.

There are a multitude of ways to optimally adapt a beamformer. This page only considers are few. Not discussed here, but of equal importance are the suboptimal methods such as direct beampattern design. By carefully designing a good beampattern, such a suboptimal method may perform just as well and in some cases better than optimal methods in a real environment, where the statistics of the interference are not as simple as assumed previously.

More Information

References

[1] V. Madisetti, D. B. Williams, The Digital Signal Processing Handbook. 1st ed. Salem, MA: CRC Press LLC., 1998

[2] Van Trees, Harry L, Optimum Array Processing – Part IV, Detection, Estimation, and Modulation Theory, 1st ed. Hoboken, NJ: John Wiley & Sons., 2002

[3] H. Cox et al, “Robust Adaptive Beamforming”, IEEE Transactions on Acoustics, Speech and Signal Processing, vol. 35, no. 10, Oct 1987

[4] Z. Rahman et al “A low complex adaptive algorithm for antenna beam steering.” Signal Processing, Communication, Computing and Networking Technologies (ICSCCN), 2011 International Conference on. July 2011.