The application of deep neural networks (DNNs) to single-channel speech enhancement has been well documented and researched. One of the main challenges for these solutions is removing strong interfering speech sources. Personalized DNN speech enhancement is one path to a solution, but it requires training models on a particular user's voice, so it is not a generalized solution. Microphone arrays and multichannel audio allow a speech enhancement system to capture the spatial information in an acoustic scene. Acoustic beamformers, such as the minimum variance distortionless response (MVDR) beamformer or the Generalized Sidelobe Canceller (GSC), can spatially filter sound, which helps overcome the competing-talker problem.
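As a rough illustration of spatial filtering, the following sketch computes narrowband MVDR weights, `w = R^-1 d / (d^H R^-1 d)`, for a single frequency bin. The array geometry (a hypothetical 4-microphone uniform linear array with 5 cm spacing), the 1 kHz frequency, the source angles, and the helper names `steering_vector`, `mvdr_weights`, and `response` are assumptions made for illustration, not details of any particular system. Under these assumptions the beamformer keeps unit gain toward the target talker while placing a deep null toward the competing talker.

```python
import numpy as np

# Assumed geometry (illustrative only): 4-mic uniform linear array, 5 cm spacing.
NUM_MICS = 4
SPACING_M = 0.05
SPEED_OF_SOUND = 343.0  # m/s


def steering_vector(angle_deg: float, freq_hz: float) -> np.ndarray:
    """Far-field narrowband steering vector for a ULA (angle measured from broadside)."""
    positions = np.arange(NUM_MICS) * SPACING_M
    delays = positions * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND
    return np.exp(-2j * np.pi * freq_hz * delays)


def mvdr_weights(cov: np.ndarray, d: np.ndarray) -> np.ndarray:
    """w = R^-1 d / (d^H R^-1 d): unit gain toward d, minimum output power otherwise."""
    r_inv_d = np.linalg.solve(cov, d)
    return r_inv_d / (d.conj() @ r_inv_d)


def response(w: np.ndarray, d: np.ndarray) -> float:
    """Magnitude of the beamformer output w^H d for a plane wave with steering vector d."""
    return float(np.abs(w.conj() @ d))


if __name__ == "__main__":
    freq = 1000.0
    d_target = steering_vector(0.0, freq)   # desired talker at broadside (assumed)
    d_interf = steering_vector(40.0, freq)  # competing talker at 40 degrees (assumed)
    # Covariance: strong interferer plus a tiny uncorrelated floor that keeps R invertible.
    cov = 10.0 * np.outer(d_interf, d_interf.conj()) + 1e-3 * np.eye(NUM_MICS)
    w = mvdr_weights(cov, d_target)
    print("gain toward target:    ", response(w, d_target))
    print("gain toward interferer:", response(w, d_interf))
```

In practice the covariance matrix is estimated per STFT bin from recorded frames rather than built analytically, and the steering vector toward the target must itself be estimated.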

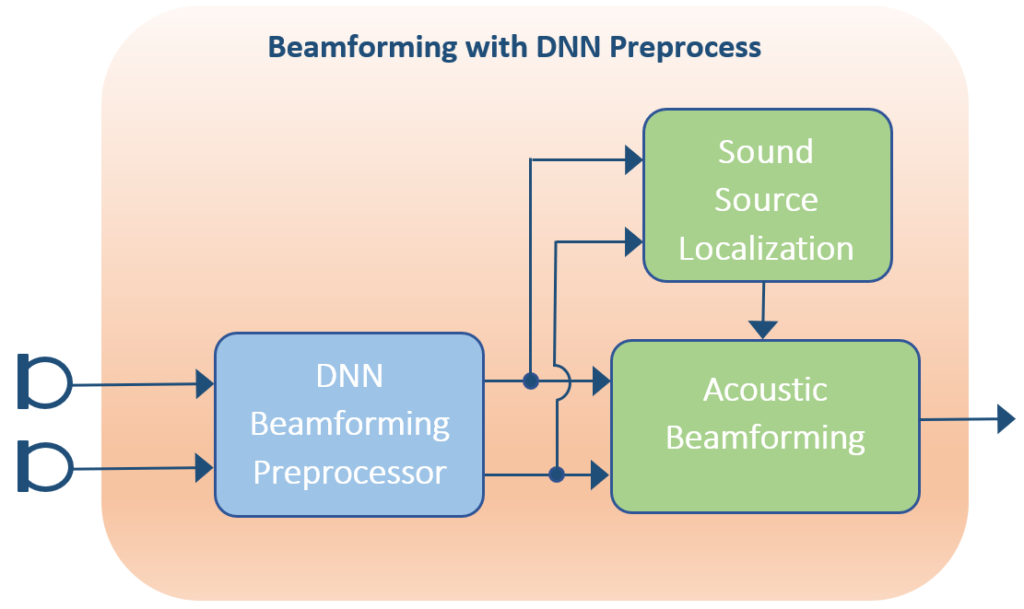

How can DNNs be used in a multichannel system with beamforming? One approach is to preprocess the microphone array data with DNN speech enhancement. The multichannel input contains the desired signal, the undesired interfering signal, and uncorrelated diffuse noise. This uncorrelated noise degrades beamformer performance in two ways. First, beamformers rely on accurate direction of arrival (DOA) information to steer the beam toward the desired source, and noise makes those estimates less reliable. Second, for MVDR beamformers, if the spatial covariance matrix contains uncorrelated noise, the beamforming weights that place nulls in the direction of interfering sounds will be suboptimal: the optimal weights trade null depth toward the interferer against suppression of the noise floor, so the nulls become shallower. Therefore, if a DNN can remove the uncorrelated noise from each channel while preserving the spatial information, the beamforming system will be able to achieve greater SNR improvements.
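To make the MVDR argument concrete, the following sketch models the spatial covariance in one frequency bin as a rank-one interferer term plus an uncorrelated noise term `noise_power * I`, then reports the residual MVDR gain toward the interferer. The geometry (a hypothetical 4-mic uniform linear array with 5 cm spacing), the 1 kHz bin, the source angles, and the function names are illustrative assumptions. Under this idealized model the null deepens as the uncorrelated term shrinks, which is the effect per-channel DNN denoising would aim for.

```python
import numpy as np

# Assumed geometry (illustrative only): 4-mic uniform linear array, 5 cm spacing.
NUM_MICS = 4
SPACING_M = 0.05
SPEED_OF_SOUND = 343.0  # m/s


def steering_vector(angle_deg: float, freq_hz: float) -> np.ndarray:
    """Far-field narrowband steering vector for a ULA (angle measured from broadside)."""
    positions = np.arange(NUM_MICS) * SPACING_M
    delays = positions * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND
    return np.exp(-2j * np.pi * freq_hz * delays)


def mvdr_weights(cov: np.ndarray, d: np.ndarray) -> np.ndarray:
    """w = R^-1 d / (d^H R^-1 d)."""
    r_inv_d = np.linalg.solve(cov, d)
    return r_inv_d / (d.conj() @ r_inv_d)


def interferer_gain_db(noise_power: float, interferer_power: float = 1.0,
                       freq_hz: float = 1000.0) -> float:
    """Residual MVDR gain toward the interferer when R also holds uncorrelated noise."""
    d_target = steering_vector(0.0, freq_hz)   # target at broadside (assumed)
    d_interf = steering_vector(40.0, freq_hz)  # interferer at 40 degrees (assumed)
    # Spatial covariance: rank-one interferer plus a diagonal (uncorrelated) noise floor.
    cov = (interferer_power * np.outer(d_interf, d_interf.conj())
           + noise_power * np.eye(NUM_MICS))
    w = mvdr_weights(cov, d_target)
    return float(20.0 * np.log10(np.abs(w.conj() @ d_interf)))


if __name__ == "__main__":
    for noise_power in (1e-4, 1e-2, 1.0, 100.0):
        print(f"uncorrelated noise power {noise_power:g}: "
              f"interferer gain {interferer_gain_db(noise_power):6.1f} dB")
```

As `noise_power` grows, the interferer gain rises from a deep null toward the response of a plain delay-and-sum beamformer, because with `R` close to a scaled identity the MVDR weights reduce to `d / M`. This is a narrowband, idealized model: real covariance estimates come from a finite number of STFT frames, and the residual left after DNN processing is not perfectly uncorrelated.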