Psychoacoustics is the study of the relationship between acoustic stimuli and the sensations they evoke in the listener. Psychoacoustic models are now used well beyond psychological and physiological acoustics. Here, we discuss their use in audio coding, specifically perceptual audio coding.

In perceptual audio coding, psychoacoustic models are used to hide the distortion introduced by quantization: the quantization noise is shaped so that the signal itself masks it. Rather than judging quantization with metrics such as signal-to-noise ratio (SNR) or mean-squared error (MSE), the coder evaluates it with psychoacoustic criteria, notably masking thresholds and spreading functions.
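The link between SNR and masking can be written in decibels. Writing $P_S(m)$ and $P_N(m)$ for the signal and quantization-noise power in subband $m$, and $T(m)$ for that subband's masking threshold (symbols introduced here for illustration), the noise-to-mask ratio (NMR) equals the signal-to-mask ratio (SMR) minus the SNR:

$$
\mathrm{NMR}(m) = 10\log_{10}\frac{P_N(m)}{T(m)}
= \underbrace{10\log_{10}\frac{P_S(m)}{T(m)}}_{\mathrm{SMR}(m)} - \underbrace{10\log_{10}\frac{P_S(m)}{P_N(m)}}_{\mathrm{SNR}(m)}
$$

Quantization noise is expected to be inaudible when $\mathrm{NMR}(m) \le 0$ dB, that is, when the subband SNR is at least as large as its SMR. A subband can therefore have a poor SNR and still sound transparent, as long as its SMR is small.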

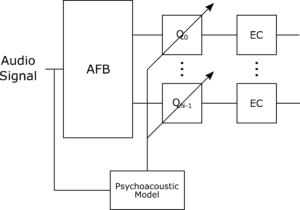

Perceptual audio coders use a relatively simple psychoacoustic model to quantize a signal perceptually. An audio buffer is windowed and split into subbands by an analysis filter bank (AFB). The type of filter bank and the number of bands depend on the codec and on the statistical properties of the signal. This stage draws on a model of the human auditory system as a set of overlapping bandpass filters. A prominent model is the Bark scale, in which each unit corresponds to one critical band of the auditory system. The bands are narrow at low frequencies, where the ear's frequency resolution is fine, and widen toward high frequencies, where resolution is coarser, reflecting the nonlinear frequency resolution of the human ear.
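To make the Bark mapping concrete, here is a minimal Python/NumPy sketch. It assumes a Hann-windowed FFT in place of the codec's analysis filter bank (real codecs typically use other banks, such as polyphase or MDCT filter banks). `hz_to_bark` and `critical_bandwidth_hz` implement the Zwicker and Terhardt (1980) approximations; `group_bins_by_bark` and `bark_band_energies` are hypothetical helpers written for this illustration.

```python
import numpy as np


def hz_to_bark(f_hz):
    """Zwicker and Terhardt (1980) approximation of critical-band rate (Bark)."""
    f = np.asarray(f_hz, dtype=float)
    return 13.0 * np.arctan(0.00076 * f) + 3.5 * np.arctan((f / 7500.0) ** 2)


def critical_bandwidth_hz(f_hz):
    """Zwicker and Terhardt (1980) approximation of critical bandwidth in Hz."""
    f = np.asarray(f_hz, dtype=float)
    return 25.0 + 75.0 * (1.0 + 1.4 * (f / 1000.0) ** 2) ** 0.69


def group_bins_by_bark(n_fft, fs):
    """Hypothetical helper: integer Bark band index for every rfft bin."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    return freqs, np.floor(hz_to_bark(freqs)).astype(int)


def bark_band_energies(frame, fs):
    """Hypothetical helper: window a buffer, transform it, sum power per Bark band."""
    window = np.hanning(len(frame))          # taper to reduce spectral leakage
    power = np.abs(np.fft.rfft(frame * window)) ** 2
    _, band = group_bins_by_bark(len(frame), fs)
    return np.bincount(band, weights=power)  # one energy value per Bark band


# Example: a 1 kHz tone in a 1024-sample buffer at 44.1 kHz.
fs, n = 44100, 1024
t = np.arange(n) / fs
energies = bark_band_energies(np.sin(2 * np.pi * 1000.0 * t), fs)
freqs, band = group_bins_by_bark(n, fs)
bins_per_band = np.bincount(band)  # few bins in low bands, many in high bands
```

Inspecting `bins_per_band` shows the lowest Bark bands holding only a few FFT bins while the top band spans many, and `critical_bandwidth_hz` grows from roughly 100 Hz at 100 Hz to several kHz at the top of the audio range, which is the widening described above.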

After the spectrum has been split into subbands, a masking threshold is computed for each subband using a tonality measure. This step is crucial: a noise-like (non-tonal) signal is a stronger masker than a tonal one, so the spectral character of each subband (how peaked or flat its spectrum is) is used to separate tonal from noise-like components and to derive a masking threshold for each. The resulting thresholds are then compared with the absolute threshold of hearing (the threshold in quiet); components that fall below it are inaudible and need not be coded. Finally, the individual masking thresholds are superimposed into a global masking threshold, which the codec uses to allocate bits in the quantization process.
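The steps above can be sketched end to end in Python/NumPy. This is a toy illustration under stated assumptions, not the model of any particular codec: an FFT stands in for the AFB; tonality comes from a spectral flatness measure (SFM) with Johnston-style masking offsets, computed here per Bark band (Johnston's original scheme uses one SFM per frame); spreading uses the Schroeder spreading function; and the threshold in quiet uses Terhardt's approximation. The helper `toy_bit_allocation` and its `spl_offset_db` calibration (how digital levels map to dB SPL) are hypothetical.

```python
import numpy as np


def hz_to_bark(f_hz):
    # Zwicker and Terhardt approximation of the critical-band rate, in Bark.
    f = np.asarray(f_hz, dtype=float)
    return 13.0 * np.arctan(0.00076 * f) + 3.5 * np.arctan((f / 7500.0) ** 2)


def spreading_db(dz):
    # Schroeder spreading function in dB; dz = maskee Bark minus masker Bark.
    # Masking spreads further upward (about -10 dB/Bark) than downward (about -25 dB/Bark).
    x = np.asarray(dz, dtype=float) + 0.474
    return 15.81 + 7.5 * x - 17.5 * np.sqrt(1.0 + x ** 2)


def threshold_in_quiet_db(f_hz):
    # Terhardt approximation of the absolute threshold of hearing, in dB SPL.
    # Frequencies are clipped at 20 Hz to avoid the singularity at DC.
    khz = np.maximum(np.asarray(f_hz, dtype=float), 20.0) / 1000.0
    return (3.64 * khz ** -0.8
            - 6.5 * np.exp(-0.6 * (khz - 3.3) ** 2)
            + 1e-3 * khz ** 4)


def toy_bit_allocation(frame, fs, spl_offset_db=90.0):
    """Hypothetical helper: per-Bark-band SMR (dB) and bit counts for one frame.

    spl_offset_db is an ASSUMED calibration mapping digital power (dB) to dB SPL,
    so that the threshold in quiet can be compared with the signal.
    """
    n = len(frame)
    # Window and transform; the FFT stands in for the codec's analysis filter bank.
    power = np.abs(np.fft.rfft(frame * np.hanning(n))) ** 2 + 1e-12
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    band = np.floor(hz_to_bark(freqs)).astype(int)
    n_bands = int(band.max()) + 1
    z = np.arange(n_bands)
    counts = np.maximum(np.bincount(band, minlength=n_bands), 1)

    # Band energies; the small floor keeps empty bands finite.
    band_power = np.bincount(band, weights=power, minlength=n_bands) + 1e-12

    # Tonality from the SFM: geometric mean over arithmetic mean, in dB.
    geo_mean = np.exp(np.bincount(band, weights=np.log(power), minlength=n_bands) / counts)
    sfm_db = 10.0 * np.log10(geo_mean / (band_power / counts))
    alpha = np.clip(sfm_db / -60.0, 0.0, 1.0)  # 1 = tonal, 0 = noise-like

    # Johnston-style offset: tonal maskers mask less, so their threshold sits lower.
    offset_db = alpha * (14.5 + z) + (1.0 - alpha) * 5.5

    # Superimpose every band's spread energy onto every other band.
    dz = z[:, None] - z[None, :]  # rows: maskee band, columns: masker band
    spread = (10.0 ** (spreading_db(dz) / 10.0)) @ band_power
    masked_thr = spread * 10.0 ** (-offset_db / 10.0)

    # Threshold in quiet at each band's mean bin frequency, in digital power units.
    center_hz = np.bincount(band, weights=freqs, minlength=n_bands) / counts
    quiet_thr = 10.0 ** ((threshold_in_quiet_db(center_hz) - spl_offset_db) / 10.0)

    # One choice among several: keep the larger of the two thresholds.
    global_thr = np.maximum(masked_thr, quiet_thr)

    # Signal-to-mask ratio; each extra bit buys roughly 6.02 dB of SNR.
    smr_db = 10.0 * np.log10(band_power / global_thr)
    bits = np.ceil(np.maximum(smr_db, 0.0) / 6.02).astype(int)
    return smr_db, bits
```

For example, `toy_bit_allocation(np.sin(2 * np.pi * 1000.0 * np.arange(1024) / 44100), 44100)` assigns bits to the Bark band containing the 1 kHz tone, while an all-zero frame receives no bits because every band falls below the threshold in quiet. With only a few FFT bins in the low bands, this per-band tonality estimate is crude; standardized models, such as the psychoacoustic models described for MPEG-1 Audio, are considerably more elaborate.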