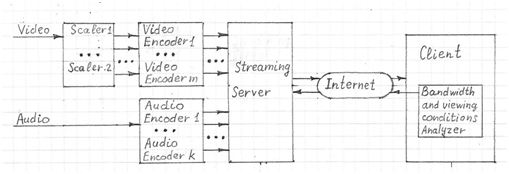

User-adaptive video streaming leverages knowledge about the user’s viewing conditions (similar to conventional broadcasting) or about how the user will interact with the content. Most video streaming systems do not exploit this information.

User-adaptive video streaming sends feedback from the client to the server, which customizes the video stream to the client’s viewing conditions (screen resolution, lighting conditions, distance between the viewer and the screen, etc.). The streaming server selects the video resolution and encoding parameters that work best for these viewing conditions and adjusts the stream bitrate accordingly. As a result, this technology improves QoE (quality of experience) by optimizing the trade-off between bandwidth and the perceptual quality of the content.
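The selection step described above can be illustrated with a minimal sketch. It is not the actual server logic. It assumes a hypothetical encoding ladder (`LADDER`), a hypothetical `select_rendition` helper, and a rule of thumb that a viewer with normal vision resolves roughly 60 pixels per degree of visual angle. Lighting conditions and other feedback are left out for brevity.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    width: int
    height: int
    bitrate_kbps: int


# Hypothetical encoding ladder; real services choose their own values.
LADDER = [
    Rendition(640, 360, 800),
    Rendition(1280, 720, 2500),
    Rendition(1920, 1080, 5000),
    Rendition(3840, 2160, 16000),
]

# Assumption: about 60 pixels per degree (one arcminute per pixel)
# is the finest detail a viewer with normal vision can resolve.
ACUITY_PIXELS_PER_DEGREE = 60.0


def max_useful_width(screen_width_m, viewing_distance_m, screen_width_px):
    """Estimate how many horizontal pixels the viewer can actually resolve."""
    # Horizontal angle the screen occupies in the viewer's field of view.
    angle_deg = math.degrees(
        2 * math.atan(screen_width_m / (2 * viewing_distance_m))
    )
    resolvable = int(angle_deg * ACUITY_PIXELS_PER_DEGREE)
    # Never request more pixels than the screen can display.
    return min(screen_width_px, resolvable)


def select_rendition(screen_width_m, viewing_distance_m, screen_width_px,
                     ladder=LADDER):
    """Pick the smallest rendition that covers the resolvable width."""
    target = max_useful_width(screen_width_m, viewing_distance_m,
                              screen_width_px)
    for rendition in sorted(ladder, key=lambda r: r.width):
        if rendition.width >= target:
            return rendition
    # Nothing is wide enough: fall back to the largest rendition.
    return max(ladder, key=lambda r: r.width)


# A 7 cm wide phone screen held at 35 cm, with a 1080-pixel-wide display.
phone = select_rendition(0.07, 0.35, 1080)
# A 1.2 m wide TV watched from 3 m, with a 3840-pixel-wide display.
tv = select_rendition(1.2, 3.0, 3840)
print(phone.width, tv.width)
```

Under these assumptions, neither viewer benefits from the full native resolution of the display, so the server can send a lower-bitrate rendition without a visible loss in quality.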

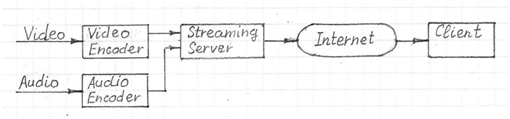

The video stream is compressed using a video codec such as H.264, VP8, VP9 or H.265. The audio stream is compressed using an audio codec such as MP3, Vorbis or AAC. Encoded audio and video streams are assembled in a container bitstream such as MP4, FLV, WebM, ASF or ISMA.

Newer technologies such as HLS (HTTP Live Streaming), Microsoft’s Smooth Streaming, Adobe’s HDS (HTTP Dynamic Streaming) and MPEG-DASH (Dynamic Adaptive Streaming over HTTP) support adaptive bitrate streaming over HTTP. They are alternatives to using proprietary transport protocols.
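As a rough illustration of adaptive bitrate streaming over HTTP, the sketch below shows the kind of decision a client might make before requesting each media segment. The `pick_bitrate` function and the `safety_factor` parameter are hypothetical names used for illustration; they are not part of HLS, Smooth Streaming, HDS or MPEG-DASH, and production players use more elaborate heuristics (buffer level, throughput smoothing, and so on).

```python
def pick_bitrate(available_kbps, measured_throughput_kbps, safety_factor=0.8):
    """Choose the highest advertised bitrate that fits the measured throughput.

    available_kbps: bitrates listed in the stream's manifest or playlist.
    measured_throughput_kbps: the client's recent download-speed estimate.
    safety_factor: assumed headroom so that throughput dips do not stall playback.
    """
    budget = measured_throughput_kbps * safety_factor
    candidates = [b for b in available_kbps if b <= budget]
    # If even the lowest bitrate exceeds the budget, use the lowest anyway.
    return max(candidates) if candidates else min(available_kbps)


ladder = [800, 2500, 5000]
print(pick_bitrate(ladder, 4000))   # budget of 3200 kbps selects 2500
print(pick_bitrate(ladder, 500))    # below the lowest rung selects 800
```

Because each segment is an ordinary HTTP request, the client can switch bitrates at segment boundaries simply by requesting the next segment from a different rendition.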