VOCAL’s speech coder software includes a complete range of speech compression algorithms, available in ANSI C and optimized for leading DSP architectures. For example, in VOCAL’s implementation of the G.729-A speech codec, the standard feature set includes model-based packet loss concealment (PLC). The jitter buffer is part of VOCAL’s overall VoIP solution. If required, specific components such as PLC or the jitter buffer can be customized. Contact us to discuss your speech application with our engineering staff.

IP networks deliver packets on a best-effort basis. Depending on network conditions, traffic volume and congestion, packets may arrive at the destination late, arrive out of order, or be lost altogether. Unlike packets carrying data whose delivery is not time-critical, packets carrying segments of speech are time-sensitive, if not time-critical, so delivery problems cannot easily be corrected (for example, by retransmitting delayed or lost packets).

How Do You Compensate for Network Jitter?

One of the key factors affecting overall voice quality in VoIP networks is how effectively network jitter is compensated. This is done principally with a jitter buffer, which should adapt to the packet transmission characteristics observed on a given link.

The depth of the jitter buffer should follow the current packet transmission characteristics. When high network jitter is observed, the buffer should be deep enough to absorb the jitter and to reorder packets that arrive out of order. When low network jitter is observed, the buffer should be shorter to minimize the end-to-end delay.

A jitter buffer always adds to the end-to-end delay, and delay is particularly harmful in full-duplex communication. For example, a one-way delay of 150 ms (in a symmetric configuration, a 300 ms round-trip delay) already approaches the lower limit of objectionable latency and may impair the interactivity of a conversation. ITU-T G.114 recommends an upper limit of 400 ms for one-way end-to-end delay (cf. Ref. [1]; for E-model aspects, cf. Ref. [2]). For this reason, when applying jitter buffering it is important to do the following:

- Detect the status of the current transmission link by monitoring the number of samples in the jitter buffer;

- Develop strategies to reorder arriving packets and to adapt the depth of the jitter buffer, since without them audible artifacts occur;

- Implement an algorithm that controls the depth of the jitter buffer so as to avoid buffer underflow and overflow (and thus achieve high jitter robustness) while also avoiding unnecessarily long end-to-end delay;

- Develop and implement a suitable playout algorithm that minimizes audible artifacts.
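The reordering and under/overflow bookkeeping from the list above can be sketched in Python as follows. This is a minimal sketch, not VOCAL's implementation: it assumes one packet per frame, consecutive sequence numbers without 16-bit RTP wrap-around, and a fixed capacity `max_packets`. The class, method and counter names are hypothetical, depth adaptation is left out, and a `None` return marks a frame the decoder would have to conceal (e.g., with PLC).

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class Packet:
    seq: int                               # sequence number (wrap-around ignored here)
    payload: bytes = field(compare=False)  # ordering uses seq only


class ReorderingJitterBuffer:
    """Reorders packets by sequence number and counts under/overflow events."""

    def __init__(self, max_packets: int) -> None:
        self.max_packets = max_packets
        self._heap: list[Packet] = []
        self._next_seq: Optional[int] = None  # sequence number due at the next playout
        self.underflows = 0   # playout requested while the buffer was empty
        self.overflows = 0    # packet arrived while the buffer was full
        self.late_drops = 0   # packet arrived after its playout slot had passed
        self.missing = 0      # slot skipped because its packet had not (yet) arrived

    def push(self, pkt: Packet) -> None:
        if self._next_seq is not None and pkt.seq < self._next_seq:
            self.late_drops += 1
            return
        if len(self._heap) >= self.max_packets:
            self.overflows += 1
            return
        heapq.heappush(self._heap, pkt)

    def pop(self) -> Optional[bytes]:
        """Called once per frame period; None means the decoder must conceal a frame."""
        if self._next_seq is not None:
            # Discard duplicates whose playout slot has already been used.
            while self._heap and self._heap[0].seq < self._next_seq:
                heapq.heappop(self._heap)
        if not self._heap:
            self.underflows += 1
            if self._next_seq is not None:
                self._next_seq += 1              # the slot is consumed anyway
            return None
        if self._next_seq is None:
            self._next_seq = self._heap[0].seq   # first packet starts the playout sequence
        if self._heap[0].seq == self._next_seq:
            self._next_seq += 1
            return heapq.heappop(self._heap).payload
        self.missing += 1                        # gap in the sequence: conceal this slot
        self._next_seq += 1
        return None
```

For example, pushing packets 1, 3, 2 and then calling `pop()` four times yields the payloads of 1, 2, 3 in order followed by `None` (one underflow). A min-heap keeps reordering cheap, at O(log n) per packet.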

Together, the four aspects above provide high-level guidance for developing an adaptive buffering scheme. The degree of adaptation, its complexity and its reliability all affect the computational cost, so balancing reliability, minimum buffer depth and quality of service against computational cost inevitably involves some compromises.

The least computationally intensive approach is a static buffer. In that case, however, the buffer depth is typically chosen larger (often much larger) than actually needed in the given circumstances. This ‘safe’ compromise degrades the quality of service, because the round-trip delay becomes unnecessarily long. Conversely, if the buffer is too small, i.e., smaller than required to accommodate the network delay jitter, packets with large latency are discarded at the destination as if they had never arrived; this manifests as buffer underflow. These considerations lead to the idea of adaptive jitter buffering and to the mechanics of audio playout from the buffer (cf. Refs. [3], [4]).
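The static-buffer trade-off can be illustrated with a few lines of Python. This is a minimal sketch assuming a fixed playout delay measured from each packet's send time and a short list of made-up one-way delays; `late_fraction` is a hypothetical helper, not part of any VOCAL API.

```python
def late_fraction(delays_ms: list[float], playout_delay_ms: float) -> float:
    """Fraction of packets discarded by a static buffer with a fixed playout delay.

    Every packet is scheduled playout_delay_ms after it was sent; a packet whose
    one-way delay exceeds that deadline arrives too late and is dropped.
    """
    late = sum(1 for d in delays_ms if d > playout_delay_ms)
    return late / len(delays_ms)


# Hypothetical one-way delays (ms): mostly about 40 ms, with two delay spikes.
delays = [38, 41, 40, 45, 39, 90, 42, 40, 120, 43]

for playout_delay in (45, 60, 100, 130):
    print(f"playout delay {playout_delay:>3} ms: "
          f"{late_fraction(delays, playout_delay):.0%} of packets late")
```

With these numbers, playout delays of 45 ms and 60 ms lose 20 % of the packets, 100 ms loses 10 %, and only 130 ms, more than three times the typical delay, loses none. That is the "safe" oversizing described above.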

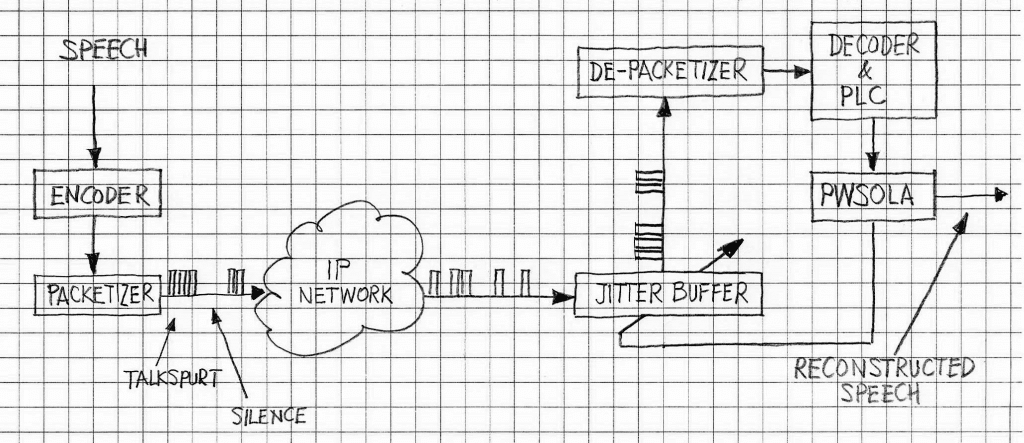

Figure 1 shows an end-to-end view of a VoIP system with an adaptive jitter buffer. The key element is the PWSOLA (Packet-based Waveform Similarity Overlap-Add) block, which controls the operation of the adaptive buffer.
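PWSOLA itself is not specified in this article. As background only, the following minimal Python sketch shows plain WSOLA (Waveform Similarity Overlap-Add), the time-scale modification technique named in the PWSOLA acronym. Stretching or compressing audio without changing its pitch is what lets a playout stage grow or shrink the buffer smoothly. This is a generic version, not VOCAL's PWSOLA. The function name, frame length and search tolerance are illustrative assumptions, and the search uses unnormalized cross-correlation for simplicity.

```python
import math


def _dot(a: list[float], b: list[float]) -> float:
    """Unnormalized cross-correlation of two equal-length segments at lag 0."""
    return sum(p * q for p, q in zip(a, b))


def wsola(x: list[float], stretch: float, frame: int = 256, tolerance: int = 64) -> list[float]:
    """Time-scale x by `stretch` (>1 lengthens, <1 shortens) while keeping its pitch.

    Each output frame is cut from near its nominal input position, shifted by up to
    +/- tolerance samples so that it best matches the natural continuation of the
    previously copied frame, and is then overlap-added with a Hann window.
    Assumes len(x) >= frame.
    """
    hop_out = frame // 2              # synthesis hop: 50 % overlap in the output
    hop_in = hop_out / stretch        # nominal analysis hop in the input
    # Periodic Hann window: copies spaced frame/2 apart sum to 1 (for even frame).
    win = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame) for n in range(frame)]

    out = [w * s for w, s in zip(win, x[:frame])]   # first frame: windowed input start
    prev, k = 0, 1                                   # prev: input start of the last frame
    while True:
        nominal = round(k * hop_in)
        # Stop when either the search region or the natural continuation runs off x.
        if nominal + tolerance + frame > len(x) or prev + hop_out + frame > len(x):
            break
        target = x[prev + hop_out: prev + hop_out + frame]   # natural continuation
        candidates = range(max(0, nominal - tolerance), nominal + tolerance + 1)
        pos = max(candidates, key=lambda p: _dot(x[p:p + frame], target))

        start = k * hop_out
        out.extend([0.0] * (start + frame - len(out)))       # grow output as needed
        for n in range(frame):
            out[start + n] += win[n] * x[pos + n]
        prev, k = pos, k + 1
    return out
```

For example, `wsola(x, 1.5, frame=160, tolerance=40)` on half a second of an 8 kHz tone returns roughly 1.45 times as many samples at the same pitch. Choosing the tolerance at least one pitch period wide gives the similarity search room to find a well-aligned segment.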

Different approaches to playout algorithms have been studied in the literature. They can be classified into four categories:

- algorithms that establish the playout delay based on a continuous estimation of the network parameters;

- statistics-based algorithms;

- algorithms that maximize user satisfaction;

- algorithms that use various heuristics and monitor certain parameters (e.g., the fraction of late packets or buffer occupancy).
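As an illustration of the fourth category, the following Python sketch adjusts the playout delay from the fraction of late packets observed over a sliding window. The class name and all parameter values (window length, the 5 % and 1 % thresholds, the 10 ms step, the 20 to 200 ms range) are hypothetical assumptions. A real implementation would also decide when to apply a change, for example during a silence period.

```python
from collections import deque


class LateFractionController:
    """Heuristic playout-delay control driven by the recent fraction of late packets."""

    def __init__(self, delay_ms: float = 60.0, window: int = 50,
                 high: float = 0.05, low: float = 0.01, step_ms: float = 10.0,
                 min_ms: float = 20.0, max_ms: float = 200.0) -> None:
        self.delay_ms = delay_ms              # current playout delay
        self.high, self.low = high, low       # late-fraction thresholds (hysteresis band)
        self.step_ms = step_ms
        self.min_ms, self.max_ms = min_ms, max_ms
        self.history = deque(maxlen=window)   # 1 = packet was late, 0 = on time

    def on_packet(self, one_way_delay_ms: float) -> float:
        """Record one packet and return the (possibly updated) playout delay."""
        self.history.append(1 if one_way_delay_ms > self.delay_ms else 0)
        if len(self.history) == self.history.maxlen:
            late = sum(self.history) / len(self.history)
            if late > self.high:      # too many late packets: deepen the buffer
                self.delay_ms = min(self.max_ms, self.delay_ms + self.step_ms)
                self.history.clear()
            elif late < self.low:     # almost no late packets: trim the delay
                self.delay_ms = max(self.min_ms, self.delay_ms - self.step_ms)
                self.history.clear()
        return self.delay_ms
```

Starting from the default 60 ms, fifty packets delayed by 100 ms raise the delay to 70 ms. A hundred subsequent packets at 30 ms then lower it in two steps to 50 ms. The gap between the two thresholds prevents the delay from oscillating when the late fraction hovers near a single limit.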

One of the best-known algorithms in the first category uses autoregressive estimation of the average network delay and its variance. The estimation is done by first-order IIR filtering (often referred to in applied statistics as a first-order ARMA model). The input is the one-way packet delay (as defined in RFC 2679, Ref. [5]), and the outputs are the estimates of the average network delay and its variance. From these short-term estimates, the individual playout times of the packets temporarily stored in the jitter buffer are computed.
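The estimator described above can be written in a few lines. The sketch below follows the form in which this algorithm is commonly presented (usually credited to Ramjee et al.). In that form, the "variance" is tracked as a smoothed absolute deviation, and the playout delay is the delay estimate plus a multiple of it. The constants α = 0.998002 and β = 4, the class name, and the use of milliseconds on a common time base are assumptions, not values given in this article.

```python
from dataclasses import dataclass

ALPHA = 0.998002  # assumed smoothing constant (commonly cited value)
BETA = 4.0        # assumed safety factor on the delay-variation estimate


@dataclass
class DelayEstimator:
    """First-order IIR (exponential smoothing) estimates of one-way delay statistics.

    d_i = ALPHA * d_{i-1} + (1 - ALPHA) * n_i
    v_i = ALPHA * v_{i-1} + (1 - ALPHA) * |d_i - n_i|
    where n_i is the one-way delay of packet i.
    """
    d_hat: float              # smoothed one-way delay (ms), seeded with a first measurement
    v_hat: float = 0.0        # smoothed absolute deviation of the delay (ms)
    alpha: float = ALPHA

    def update(self, n_i: float) -> None:
        """Update both estimates with the delay n_i (ms) of a newly received packet."""
        self.d_hat = self.alpha * self.d_hat + (1.0 - self.alpha) * n_i
        self.v_hat = self.alpha * self.v_hat + (1.0 - self.alpha) * abs(self.d_hat - n_i)

    def playout_delay(self, beta: float = BETA) -> float:
        """Playout delay (ms) to add to a packet's send time."""
        return self.d_hat + beta * self.v_hat
```

With α this close to 1, a single 140 ms spike on a steady 40 ms path moves the delay estimate by only about 0.2 ms. Because of this slow reaction, the estimates are usually applied at talkspurt boundaries rather than per packet.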

The short-term average network delay and its variance are estimated for every received packet, but they are used to calculate the playout time only for the first packet of a talkspurt; the rest of the talkspurt is played out with the same playout delay. Consequently, if network conditions change during an exceptionally long talkspurt, the playout delay cannot follow them, and late packets or audible artifacts may result. (Note that during silence periods between talkspurts, an application may send occasional comfort noise packets or no packets at all. The first packet of a talkspurt is the first packet following a silence period. Talkspurts and their identification are discussed in more detail in RFC 3551, Ref. [6].)
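The talkspurt rule can be sketched as follows. The sketch assumes each received packet already carries the playout-delay estimate that was current when it arrived, for instance from an estimator of the kind described above. It also assumes the first packet of a talkspurt is flagged, as RFC 3551 does with the RTP marker bit. The `RxPacket` type and `schedule` function are hypothetical, and sender and receiver times are assumed to share a common time base.

```python
from dataclasses import dataclass


@dataclass
class RxPacket:
    send_ms: float        # sender timestamp converted to ms
    recv_ms: float        # local arrival time in ms
    marker: bool          # True for the first packet of a talkspurt
    est_delay_ms: float   # playout-delay estimate available when this packet arrived


def schedule(packets: list[RxPacket]) -> list[tuple[float, bool]]:
    """Return (playout time, is_late) for each packet, in order.

    The playout delay is latched at the first packet of each talkspurt and reused
    for the rest of it, so spacing within a talkspurt matches the sender's spacing.
    """
    result = []
    latched = None
    for p in packets:
        if p.marker or latched is None:
            latched = p.est_delay_ms          # new talkspurt: adopt the current estimate
        playout = p.send_ms + latched
        result.append((playout, p.recv_ms > playout))
    return result


pkts = [
    RxPacket(send_ms=0, recv_ms=45, marker=True, est_delay_ms=50),
    RxPacket(send_ms=20, recv_ms=62, marker=False, est_delay_ms=65),
    RxPacket(send_ms=40, recv_ms=100, marker=False, est_delay_ms=80),
    RxPacket(send_ms=200, recv_ms=260, marker=True, est_delay_ms=80),
]
for playout, late in schedule(pkts):
    print(playout, late)
```

In this example, the estimate rises to 80 ms during the first talkspurt, but the latched 50 ms delay is kept. The third packet (playout at 90 ms, arrival at 100 ms) therefore misses its slot, which illustrates the limitation noted above. The second talkspurt starts with the updated 80 ms delay.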

Work on alternative solutions for adaptive jitter/playout buffering for VoIP remains an active field of applied research in voice communication. A particularly good overview is given in Ref. [7].

What Is Clock Skew?

In addition to network jitter, a jitter buffer has to deal with another aspect of packet-based transmission: clock skew. Clock skew is the slow, constant drift of the clocks at the two ends relative to each other, caused by imperfections in the clock sources. Even highly accurate clocks will drift apart unless they are derived from the same source. As a result, the playout algorithm at one end gradually shortens or lengthens the jitter buffer without being aware of it. In other words, consecutive packet timestamps span slightly less real time at one end than at the other. The jitter buffer mechanism must compensate for this clock skew; otherwise, the drift will eventually cause a disruption in the data stream or unacceptable latency.
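One simple way to detect clock skew is to fit a straight line to the offset between each packet's arrival time and its sender timestamp. Jitter scatters the points, while skew appears as a persistent slope. The minimal Python sketch below does this with a least-squares fit. The fit and the function names are illustrative assumptions, not a method described in this article, and timestamps are assumed already converted to seconds. The compensation step itself (e.g., occasionally dropping or inserting audio, or time-scaling it) is not shown.

```python
def estimate_skew(send_s: list[float], recv_s: list[float]) -> float:
    """Least-squares slope of (arrival time - send timestamp) versus send timestamp.

    Jitter scatters the offsets around a line; the slope of that line is the
    relative clock skew in seconds per second (multiply by 1e6 for ppm).
    A positive slope means the receiver clock runs fast relative to the sender's,
    so a receiver playing at its own clock rate slowly drains the buffer.
    """
    n = len(send_s)
    offsets = [r - s for s, r in zip(send_s, recv_s)]
    mean_x = sum(send_s) / n
    mean_y = sum(offsets) / n
    sxx = sum((x - mean_x) ** 2 for x in send_s)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(send_s, offsets))
    return sxy / sxx


def seconds_until_margin_used(skew: float, margin_s: float) -> float:
    """Time for a constant skew to consume margin_s of buffer headroom."""
    return margin_s / abs(skew)


# Synthetic example: 20 ms packets, 50 ppm skew, 30 ms base delay, 0/5 ms jitter.
send = [0.02 * i for i in range(1000)]
recv = [s * (1 + 50e-6) + 0.030 + (0.005 if i % 2 else 0.0) for i, s in enumerate(send)]
print(f"estimated skew: {estimate_skew(send, recv) * 1e6:.1f} ppm")
```

The fit recovers a value close to the 50 ppm built into the synthetic data. At a skew of 50 ppm, a 20 ms buffer margin is consumed in 0.020 / 50e-6 = 400 s, a little under seven minutes. This is why even a well-sized jitter buffer needs skew compensation on long calls.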

References

- [1] ITU-T, Recommendation G.114, “One-way transmission time,” May 2003.

- [2] ITU-T, Recommendation G.107, “The E-model, a computational model for use in transmission planning.”

- [3] D. Craciun and A. Raileanu, “Reducing VoIP quality degradation when network conditions are unstable,” Embedded Comm, 2010.

- [4] “Adaptive Jitter Buffer,” Institute for Communication Systems and Data Processing, RWTH Aachen University.

- [5] IETF RFC 2679, “A One-way Delay Metric for IPPM.”

- [6] IETF RFC 3551, “RTP Profile for Audio and Video Conferences with Minimal Control.”

- [7] Q. Gong, “Playout Buffering for Conversational Voice over IP,” McGill University, 2012.

VOCAL’s solution is available for ANSI C and leading DSP platforms. Please contact us for the specific platforms supported.