Hidden Markov models (HMMs) are statistical models that can be used to model the behavior of non-stationary signal processes such as time-varying image sequences, music signals, and speech signals. The non-stationary characteristics of speech signals fall into two main categories: variations in spectral composition and variations in articulation rate. An HMM models these variations through the probabilities associated with state observations and state transitions: the state observation probabilities capture spectral variations, while the state transition probabilities capture variations in timing.

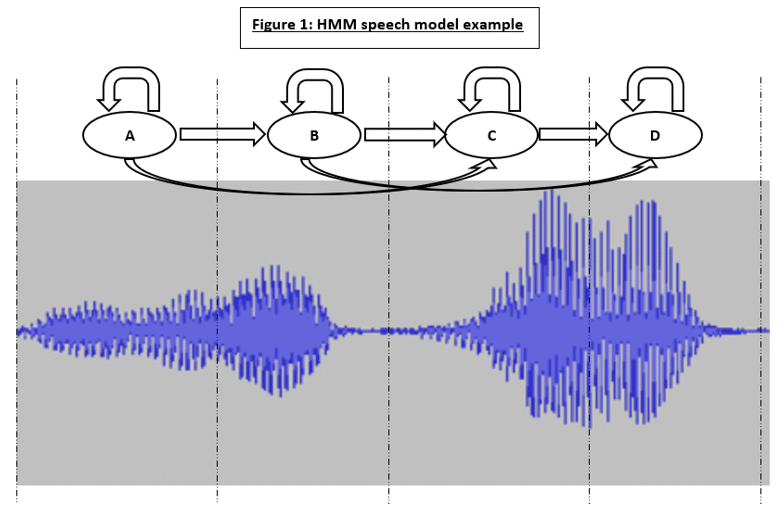

Consider the speech signal shown in Figure 1. Each segment of the signal can be modeled by a Markovian state. Because different realizations of the same signal (for example, repeated utterances of the same word) vary randomly, each state describes its segment probabilistically, through its state observation probabilities. Transition probabilities connect the states: at each time step, the model either transitions to the next state in the HMM or self-loops and remains in the current state, with the choice governed by the transition probabilities. Self-loops let a single state cover segments of varying duration.
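To make the roles of the two probability sets concrete, the following Python sketch samples from a small left-to-right HMM. The three states, the transition matrix `A`, and the per-state Gaussian observation parameters `means` and `stds` are hypothetical values chosen for illustration; practical speech HMMs usually emit feature vectors rather than scalars.

```python
import numpy as np

# Hypothetical 3-state left-to-right HMM. Row s of A gives the probabilities
# of self-looping in state s or advancing to state s + 1.
A = np.array([
    [0.8, 0.2, 0.0],
    [0.0, 0.7, 0.3],
    [0.0, 0.0, 1.0],  # final state can only self-loop
])
# Hypothetical scalar Gaussian observation PDF for each state.
means = np.array([-1.0, 2.0, 0.5])
stds = np.array([0.3, 0.5, 0.2])


def sample_hmm(A, means, stds, T, rng):
    """Draw a state sequence and T observations, starting in state 0."""
    states = np.empty(T, dtype=int)
    obs = np.empty(T)
    s = 0
    for t in range(T):
        states[t] = s
        obs[t] = rng.normal(means[s], stds[s])  # state observation event
        s = rng.choice(len(A), p=A[s])          # transition or self-loop event
    return states, obs


rng = np.random.default_rng(0)
states, obs = sample_hmm(A, means, stds, T=20, rng=rng)
print(states)  # non-decreasing; run lengths depend on the self-loop draws
```

Changing the seed changes how long each state persists (the self-loops) and the values observed within each state, which loosely mirrors variations in articulation rate and spectral composition, respectively.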

As an example, consider the use of HMMs in the estimation of a signal x(t) from an observed signal y(t) which is contaminated with noise n(t):

$$y(t) = x(t) + n(t) \tag{1.1}$$

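From Bayes' rule, the posterior PDF of the signal x(t) given the observation y(t) is proportional to the product of the likelihood of y(t) given x(t) and the prior PDF of x(t). For additive noise that is independent of the signal, the likelihood is the noise PDF evaluated at y(t) - x(t):

$$f_{X|Y}\big(x(t) \mid y(t)\big) = \frac{f_{Y|X}\big(y(t) \mid x(t)\big)\, f_X\big(x(t)\big)}{f_Y\big(y(t)\big)} = \frac{f_N\big(y(t) - x(t)\big)\, f_X\big(x(t)\big)}{f_Y\big(y(t)\big)} \tag{1.2}$$

Recall that the maximum a posteriori (MAP) estimate is the value at which the posterior PDF attains its maximum. It minimizes the Bayesian risk, defined as the cost-of-error function averaged over the posterior distribution of x(t), when the cost function is uniform (zero for errors within a small interval around the true value and one elsewhere). Since f_Y(y(t)) does not depend on x(t), the MAP estimate is obtained by maximizing the numerator of eq. 1.2:

$$\hat{x}^{\mathrm{MAP}}(t) = \arg\max_{x(t)} \; f_N\big(y(t) - x(t)\big)\, f_X\big(x(t)\big) \tag{1.3}$$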
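As a numerical check of eq. 1.3, the sketch below assumes, hypothetically, a Gaussian prior $f_X$ with mean `mu_x` and variance `var_x` and zero-mean Gaussian noise $f_N$ with variance `var_n`. It maximizes $\log f_N(y - x) + \log f_X(x)$ over a grid of candidate values and compares the result with the closed form that holds only under these Gaussian assumptions, $\hat{x} = (\sigma_X^2 y + \sigma_N^2 \mu_X)/(\sigma_X^2 + \sigma_N^2)$.

```python
import numpy as np


def log_gauss(v, mean, var):
    """Log-PDF of a Gaussian with the given mean and variance."""
    return -0.5 * np.log(2 * np.pi * var) - 0.5 * (v - mean) ** 2 / var


def map_estimate_grid(y, mu_x, var_x, var_n, grid):
    """MAP estimate of x from y = x + n by maximizing
    log f_N(y - x) + log f_X(x) over a grid of candidate x values."""
    log_post = log_gauss(y - grid, 0.0, var_n) + log_gauss(grid, mu_x, var_x)
    return grid[np.argmax(log_post)]


def map_estimate_closed_form(y, mu_x, var_x, var_n):
    """Closed-form MAP for a Gaussian prior and zero-mean Gaussian noise."""
    return (var_x * y + var_n * mu_x) / (var_x + var_n)


grid = np.linspace(-5.0, 5.0, 100001)
y, mu_x, var_x, var_n = 1.5, 0.0, 1.0, 0.5
print(map_estimate_grid(y, mu_x, var_x, var_n, grid))
print(map_estimate_closed_form(y, mu_x, var_x, var_n))
```

With these values both estimates come out at about 1.0: the noisy observation is pulled toward the prior mean by an amount set by the ratio of the noise and signal variances.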

To compute the MAP estimate in eq. 1.3, PDF models of the signal, $f_X$, and of the noise, $f_N$, are needed. For time-varying signals such as speech, an N-state HMM can model the PDF of the non-stationary process as a Markov chain of N stationary sub-processes, with each state trained to model a distinct segment of the process. Because the PDF of each process at time t then depends on which state its HMM occupies, signal estimation requires estimates of the state sequences of both the signal process and the noise process.
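The sense in which an N-state HMM models the PDF of a whole observation sequence can be illustrated with the forward algorithm, which sums over all state paths to evaluate $\log f(\mathbf{Y} \mid \mathcal{M})$. This is a minimal log-domain sketch assuming scalar observations and Gaussian state PDFs; the function names and model parameters are illustrative and not taken from the original text.

```python
import numpy as np


def log_gauss(v, means, stds):
    """Log-PDF of scalar v under each state's Gaussian (vectorized over states)."""
    return -0.5 * np.log(2 * np.pi * stds**2) - 0.5 * ((v - means) / stds) ** 2


def hmm_log_likelihood(obs, pi, A, means, stds):
    """Forward algorithm in the log domain: log f(y(0), ..., y(T-1) | HMM)."""
    with np.errstate(divide="ignore"):  # log(0) -> -inf for forbidden moves
        log_pi, log_A = np.log(pi), np.log(A)
    # alpha[i] = log f(y(0..t), s(t) = i)
    alpha = log_pi + log_gauss(obs[0], means, stds)
    for v in obs[1:]:
        # Sum over predecessor states j of alpha[j] + log A[j, i], then emit y(t).
        alpha = np.logaddexp.reduce(alpha[:, None] + log_A, axis=0)
        alpha = alpha + log_gauss(v, means, stds)
    return np.logaddexp.reduce(alpha)


# Hypothetical 3-state left-to-right model.
pi = np.array([1.0, 0.0, 0.0])
A = np.array([[0.8, 0.2, 0.0], [0.0, 0.7, 0.3], [0.0, 0.0, 1.0]])
means, stds = np.array([-1.0, 2.0, 0.5]), np.array([0.3, 0.5, 0.2])
obs = np.array([-1.1, -0.9, 1.8, 2.2, 0.4, 0.6])
print(hmm_log_likelihood(obs, pi, A, means, stds))
```

Working with log-probabilities and `np.logaddexp` avoids the numerical underflow that direct products of many small densities would cause on long sequences.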

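Given an observation sequence $\mathbf{Y} = [y(0), y(1), \ldots, y(T-1)]$, the single most probable state sequences $\hat{\mathbf{s}}_X$ for the signal HMM $\mathcal{M}_X$ and $\hat{\mathbf{s}}_N$ for the noise HMM $\mathcal{M}_N$ are given by eqs. 1.4 and 1.5:

$$\hat{\mathbf{s}}_X = \arg\max_{\mathbf{s}_X} \Big( \max_{\mathbf{s}_N} f_{\mathbf{Y}}\big(\mathbf{Y}, \mathbf{s}_X, \mathbf{s}_N \mid \mathcal{M}_X, \mathcal{M}_N\big) \Big) \tag{1.4}$$

$$\hat{\mathbf{s}}_N = \arg\max_{\mathbf{s}_N} \Big( \max_{\mathbf{s}_X} f_{\mathbf{Y}}\big(\mathbf{Y}, \mathbf{s}_X, \mathbf{s}_N \mid \mathcal{M}_X, \mathcal{M}_N\big) \Big) \tag{1.5}$$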
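Eqs. 1.4 and 1.5 can be evaluated together: the signal and noise components of the single best joint path $(\mathbf{s}_X, \mathbf{s}_N)$ maximize both expressions. The sketch below finds that path with a Viterbi search over the product state space of the two HMMs, under hypothetical assumptions not stated in the text: the two Markov chains evolve independently (so the joint transition matrix is a Kronecker product), and in joint state $(i, j)$ the observation $y = x + n$ is Gaussian with mean $\mu_{X,i} + \mu_{N,j}$ and variance $\sigma^2_{X,i} + \sigma^2_{N,j}$, as follows from eq. 1.1 for independent Gaussian signal and noise. All function and parameter names are illustrative.

```python
import numpy as np


def viterbi(log_pi, log_A, log_B):
    """Most probable state path; log_B[t, s] = log f(y(t) | state s)."""
    T, S = log_B.shape
    delta = log_pi + log_B[0]
    back = np.zeros((T, S), dtype=int)
    for t in range(1, T):
        scores = delta[:, None] + log_A  # scores[j, i]: arrive in i from j
        back[t] = np.argmax(scores, axis=0)
        delta = scores.max(axis=0) + log_B[t]
    path = np.empty(T, dtype=int)
    path[-1] = np.argmax(delta)
    for t in range(T - 1, 0, -1):  # trace back through stored predecessors
        path[t - 1] = back[t, path[t]]
    return path


def decode_signal_and_noise(y, pi_x, A_x, mu_x, var_x, pi_n, A_n, mu_n, var_n):
    """Jointly decode signal and noise state sequences (sketch of eqs. 1.4-1.5).

    Joint state index k = i * N_noise + j pairs signal state i with noise state j.
    """
    n_noise = len(pi_n)
    with np.errstate(divide="ignore"):
        log_pi = np.log(np.kron(pi_x, pi_n))  # independent initial states
        log_A = np.log(np.kron(A_x, A_n))     # independent transitions
    # y = x + n with independent Gaussians: means and variances add.
    mean = (mu_x[:, None] + mu_n[None, :]).ravel()
    var = (var_x[:, None] + var_n[None, :]).ravel()
    log_B = -0.5 * np.log(2 * np.pi * var) - 0.5 * (y[:, None] - mean) ** 2 / var
    path = viterbi(log_pi, log_A, log_B)
    return path // n_noise, path % n_noise


y = np.array([0.1, -0.2, 0.0, 9.8, 10.1])
s_x, s_n = decode_signal_and_noise(
    y,
    pi_x=np.array([1.0, 0.0]), A_x=np.array([[0.5, 0.5], [0.0, 1.0]]),
    mu_x=np.array([0.0, 10.0]), var_x=np.array([1.0, 1.0]),
    pi_n=np.array([0.5, 0.5]), A_n=np.array([[0.9, 0.1], [0.1, 0.9]]),
    mu_n=np.array([0.0, 0.0]), var_n=np.array([0.1, 2.0]),
)
print(s_x, s_n)
```

The product state space has $N_X N_N$ states, so the cost of this exact joint search grows with the product of the two model sizes.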

Once the most likely state sequences are obtained, the signal and noise PDFs associated with the decoded states at each time t can be substituted into eq. 1.3 to compute the MAP estimate of x(t).
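Under the same hypothetical Gaussian assumptions, substituting the decoded states into eq. 1.3 gives a closed-form estimate at each frame: with signal state $i$ and noise state $j$, $\hat{x}(t) = \big(\sigma^2_{X,i}(y(t) - \mu_{N,j}) + \sigma^2_{N,j}\mu_{X,i}\big)/(\sigma^2_{X,i} + \sigma^2_{N,j})$. The sketch below takes the state sequences as inputs (for example, from a Viterbi decoder); its names and parameters are illustrative.

```python
import numpy as np


def state_conditioned_map(y, s_x, s_n, mu_x, var_x, mu_n, var_n):
    """Per-frame MAP estimate of x(t) for y(t) = x(t) + n(t), given decoded
    signal states s_x and noise states s_n with Gaussian state PDFs."""
    y = np.asarray(y, dtype=float)
    m, v = mu_x[s_x], var_x[s_x]      # signal-state prior f_X at each frame
    nu, tau = mu_n[s_n], var_n[s_n]   # noise-state PDF f_N at each frame
    # Maximizer of log f_N(y - x) + log f_X(x) for Gaussian f_N and f_X.
    return (v * (y - nu) + tau * m) / (v + tau)


y = np.array([0.3, -0.4, 9.5])
s_x = np.array([0, 0, 1])
s_n = np.array([0, 0, 0])
x_hat = state_conditioned_map(
    y, s_x, s_n,
    mu_x=np.array([0.0, 10.0]), var_x=np.array([1.0, 1.0]),
    mu_n=np.array([0.0]), var_n=np.array([0.1]),
)
print(x_hat)
```

Each estimate moves y(t) toward the mean of its decoded signal state, by an amount set by the relative variances of the signal and noise states at that frame.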