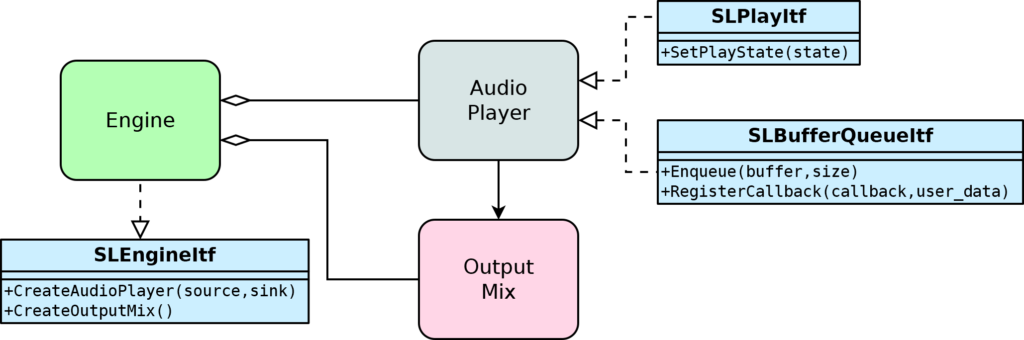

Most Android development is done in Java, and the Java API includes everything needed to play and record audio in Android applications. However, there are cases where a native audio library is preferred, or even necessary for performance reasons. (A native library executes directly on the CPU rather than through the Java virtual machine.) For these situations, the Android NDK implements the OpenSL ES audio API. OpenSL ES is built from objects and interfaces: an object exposes its functionality through interfaces, and several kinds of object may implement the same interface. The objects needed for audio output are ‘Engine’, ‘Audio Player’ and ‘Output Mix’.

First the ‘Engine’ object is created, then the engine is used to create the other two objects. The ‘Audio Player’ object is created with its source and sink specified: the source should be PCM audio, and the sink should be the ‘Output Mix’ object. Once the audio player is configured, it can be realized. After it is realized, it is possible to get the ‘SLBufferQueue’ interface and the ‘SLPlay’ interface (in the NDK headers these have the types ‘SLAndroidSimpleBufferQueueItf’ and ‘SLPlayItf’). The ‘SLBufferQueue’ interface is used to send audio samples to the ‘Audio Player’ object to be played. It can also be used to register a callback that runs every time the ‘Audio Player’ object needs a new block of audio samples. The ‘SLPlay’ interface is used to start and stop the stream. While playing, the ‘Output Mix’ object interacts with the Android system, so stream properties such as volume and audio route can be controlled through the standard AudioManager API.
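The sequence above can be sketched in C roughly as follows. This is a minimal illustration rather than a complete player: the function and constant names (`fill_square`, `start_tone`, `stop_tone`, `on_buffer_done`, `BLOCK_FRAMES`, `PERIOD_FRAMES`) are hypothetical, the stream is assumed to be 44.1 kHz mono 16-bit PCM, and the test tone is a simple square wave. The OpenSL ES part is wrapped in `#ifdef __ANDROID__` only because the headers ship with the NDK; the sample generator itself is plain C.

```c
#include <stddef.h>
#include <stdint.h>

#define BLOCK_FRAMES  1000  /* frames per enqueued block (assumed value) */
#define PERIOD_FRAMES 100   /* 44100 / 100 = 441 Hz test tone (assumed) */

/* Fill buf with a mono 16-bit square wave. period must be non-zero. */
void fill_square(int16_t *buf, size_t frames, size_t period, int16_t amp)
{
    for (size_t i = 0; i < frames; i++)
        buf[i] = (i % period) < period / 2 ? amp : (int16_t)-amp;
}

#ifdef __ANDROID__  /* the OpenSL ES headers are only available in the NDK */
#include <SLES/OpenSLES.h>
#include <SLES/OpenSLES_Android.h>

static SLObjectItf engine_obj, mix_obj, player_obj;
static SLEngineItf engine;
static SLPlayItf play;
static SLAndroidSimpleBufferQueueItf queue;
static int16_t block[BLOCK_FRAMES];

/* Called each time the player finishes a buffer and needs another block. */
static void on_buffer_done(SLAndroidSimpleBufferQueueItf bq, void *ctx)
{
    (void)ctx;
    fill_square(block, BLOCK_FRAMES, PERIOD_FRAMES, 8000);
    (*bq)->Enqueue(bq, block, sizeof block);
}

#define CHECK(call) do { if ((call) != SL_RESULT_SUCCESS) return -1; } while (0)

int start_tone(void)
{
    /* 1. Engine: create, realize, then fetch its SLEngineItf. */
    CHECK(slCreateEngine(&engine_obj, 0, NULL, 0, NULL, NULL));
    CHECK((*engine_obj)->Realize(engine_obj, SL_BOOLEAN_FALSE));
    CHECK((*engine_obj)->GetInterface(engine_obj, SL_IID_ENGINE, &engine));

    /* 2. Output mix, created through the engine. */
    CHECK((*engine)->CreateOutputMix(engine, &mix_obj, 0, NULL, NULL));
    CHECK((*mix_obj)->Realize(mix_obj, SL_BOOLEAN_FALSE));

    /* 3. Source: PCM from a buffer queue. Sink: the output mix. */
    SLDataLocator_AndroidSimpleBufferQueue loc_bq = {
        SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2 };
    SLDataFormat_PCM pcm = {
        SL_DATAFORMAT_PCM, 1, SL_SAMPLINGRATE_44_1,
        SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,
        SL_SPEAKER_FRONT_CENTER, SL_BYTEORDER_LITTLEENDIAN };
    SLDataSource src = { &loc_bq, &pcm };
    SLDataLocator_OutputMix loc_mix = { SL_DATALOCATOR_OUTPUTMIX, mix_obj };
    SLDataSink sink = { &loc_mix, NULL };

    /* 4. Audio player: create, realize, then fetch play and queue interfaces. */
    const SLInterfaceID ids[] = { SL_IID_ANDROIDSIMPLEBUFFERQUEUE };
    const SLboolean req[] = { SL_BOOLEAN_TRUE };
    CHECK((*engine)->CreateAudioPlayer(engine, &player_obj, &src, &sink,
                                       1, ids, req));
    CHECK((*player_obj)->Realize(player_obj, SL_BOOLEAN_FALSE));
    CHECK((*player_obj)->GetInterface(player_obj, SL_IID_PLAY, &play));
    CHECK((*player_obj)->GetInterface(player_obj,
                                      SL_IID_ANDROIDSIMPLEBUFFERQUEUE, &queue));

    /* 5. Register the callback, start playing, and prime the queue. */
    CHECK((*queue)->RegisterCallback(queue, on_buffer_done, NULL));
    CHECK((*play)->SetPlayState(play, SL_PLAYSTATE_PLAYING));
    fill_square(block, BLOCK_FRAMES, PERIOD_FRAMES, 8000);
    CHECK((*queue)->Enqueue(queue, block, sizeof block));
    return 0;
}

void stop_tone(void)
{
    /* Stop the stream, then destroy objects in reverse order of creation. */
    if (play) (*play)->SetPlayState(play, SL_PLAYSTATE_STOPPED);
    if (player_obj) (*player_obj)->Destroy(player_obj);
    if (mix_obj) (*mix_obj)->Destroy(mix_obj);
    if (engine_obj) (*engine_obj)->Destroy(engine_obj);
    player_obj = mix_obj = engine_obj = NULL;
    play = NULL;
}
#endif
```

The first block is enqueued by hand after the player is set to playing; each later block comes from the callback. Because `BLOCK_FRAMES` is a whole multiple of `PERIOD_FRAMES`, the waveform stays continuous from one block to the next without tracking phase. A real application would typically queue more than one buffer and generate or decode its samples outside the callback.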